Identifying service boundaries in a monolithic API — A Serverless Migration Decision Journal

This is part 4 in the series Migrating a Monolithic SaaS App to Serverless — A Decision Journal.

It’s now been over a week since I deployed API Gateway as a proxy to my legacy API. So far, it’s been pretty quiet. 😀

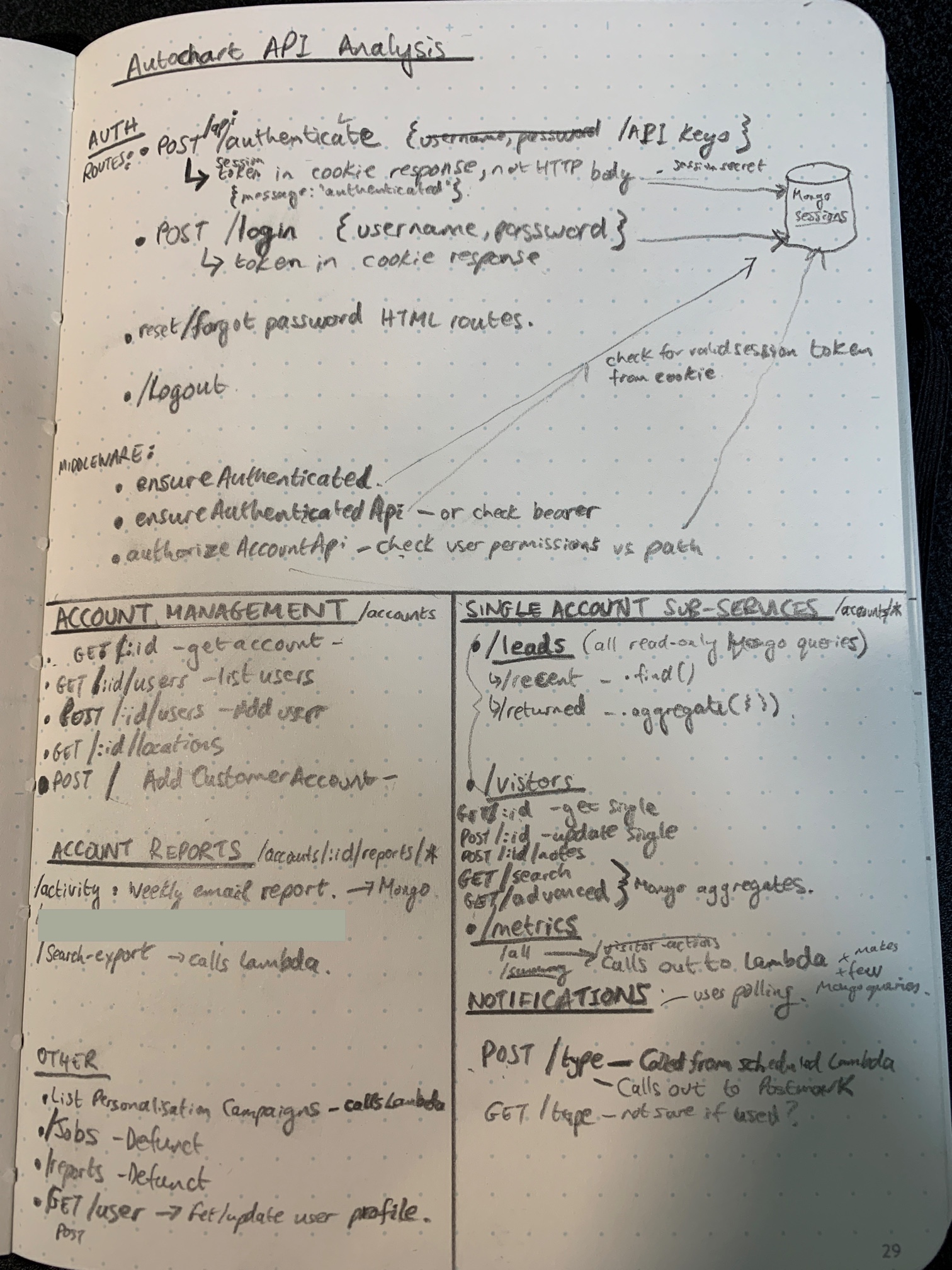

Earlier this week, I analysed my monolithic Express.js API codebase to identify service boundaries. The aim was to logically group related routes into their own services and to select the services that would make most sense to migrate first.

Listed below are the discrete services I came up with. For each service, I’ve specified the downstream resources it currently depends on and any MongoDB collections (tables) it uses.

Special shout-out here to Yan Cui, whose article and excellent Production-Ready Serverless course I’ve leaned on heavily.

Auth

Registration, login, authorisation and user profile management. The implementation of these routes uses PassportJS and is relatively complex. Downstream resources: MongoDB (Collections: Users, Sessions), Postmark.

Customer Account Management

Create customer account, add user to account, change account settings, close account. Downstream resources: MongoDB (Collections: CustomerAccounts, Users).

Visitor Profile

Record visitor activity, fetch visitor profile, attach notes to visitor. This is used both by the end-user portal and by the existing Event Tracking service. Downstream resources: MongoDB (Collections: Visitors, Notifications).

Visitor Search

Search for visitors. Downstream resources: MongoDB (Collections: Visitors).

Reporting

Account-specific on-demand/scheduled reports which run queries against Mongo and return JSON or HTML responses. A weekly activity email report is the main one here. Downstream resources: MongoDB (Collections: Visitors).

Event Metrics

Show aggregated metrics related to visitor activity on the account dashboard page. This is just a single /all route, which calls an existing Lambda function and also performs two MongoDB count queries. Downstream resources: existing Lambda (from Event Tracking service), MongoDB (Collections: Visitors).
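This route does three independent reads and nothing else, so it looks like a good fit for a small Lambda function. The sketch below shows one way to structure it under a few assumptions. The dependency names are hypothetical: `invokeTrackingMetrics` stands in for the call to the existing Event Tracking Lambda, and `countVisitors` stands in for a MongoDB count query. The count filters and result field names are hypothetical too. All three reads are issued in parallel.

```typescript
// Hypothetical dependency interfaces: in a real deployment these would wrap
// the AWS SDK Lambda client and the MongoDB driver respectively.
interface MetricsDeps {
  invokeTrackingMetrics: (accountId: string) => Promise<Record<string, number>>;
  countVisitors: (filter: Record<string, unknown>) => Promise<number>;
}

// Hypothetical response shape for the /all route.
interface EventMetrics {
  tracking: Record<string, number>;
  totalVisitors: number;
  recentVisitors: number;
}

// Builds the /all handler logic. The three reads do not depend on each
// other, so they are issued concurrently with Promise.all.
function makeGetAllMetrics(deps: MetricsDeps) {
  return async (accountId: string, since: Date): Promise<EventMetrics> => {
    const [tracking, totalVisitors, recentVisitors] = await Promise.all([
      deps.invokeTrackingMetrics(accountId),
      // Hypothetical field names; the real Visitors schema may differ.
      deps.countVisitors({ accountId }),
      deps.countVisitors({ accountId, lastSeen: { $gte: since } }),
    ]);
    return { tracking, totalVisitors, recentVisitors };
  };
}
```

Injecting the dependencies keeps the logic testable with fakes. In production, `countVisitors` could wrap the MongoDB Node.js driver's `collection.countDocuments(filter)`.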

Activity Notifications (internal)

This has a couple of routes that are triggered by a Lambda polling job. The current implementation fetches the Notification records that were saved to MongoDB whenever a Visitor Profile was saved. It then generates an email for each one and sends it using Postmark. Since this is an internal-only API, it doesn’t really need an API Gateway endpoint. It could probably be refactored completely so that visitor profile updates publish an event to SNS, which in turn triggers a Lambda handler that sends the email. Downstream resources: MongoDB (Collections: Notifications, Visitors), Postmark.
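The SNS-based refactor could look roughly like the sketch below, under a few assumptions. The event payload (`VisitorProfileUpdated`) and the `SendEmail` function are hypothetical; in practice `SendEmail` could wrap the Postmark client. Only the outer event shape (`Records[].Sns.Message`, a string) follows what SNS delivers to Lambda.

```typescript
// Minimal subset of the SNS event shape that Lambda receives:
// each record carries the published message as a string in Sns.Message.
interface SnsEventLike {
  Records: Array<{ Sns: { Message: string } }>;
}

// Hypothetical payload that a visitor profile update might publish.
interface VisitorProfileUpdated {
  accountId: string;
  visitorId: string;
  recipientEmail: string;
  summary: string;
}

interface Email {
  to: string;
  subject: string;
  textBody: string;
}

// Hypothetical email sender; in practice this could wrap the Postmark client.
type SendEmail = (email: Email) => Promise<void>;

// Turns one profile-update event into a notification email.
function buildNotificationEmail(evt: VisitorProfileUpdated): Email {
  return {
    to: evt.recipientEmail,
    subject: `Visitor activity: ${evt.visitorId}`,
    textBody: evt.summary,
  };
}

// Returns a Lambda handler that sends one email per SNS record
// and resolves to the number of emails sent.
function makeNotificationHandler(sendEmail: SendEmail) {
  return async (event: SnsEventLike): Promise<number> => {
    const emails = event.Records.map((r) =>
      buildNotificationEmail(JSON.parse(r.Sns.Message) as VisitorProfileUpdated),
    );
    await Promise.all(emails.map(sendEmail));
    return emails.length;
  };
}
```

SNS invokes Lambda asynchronously, and Lambda retries failed asynchronous invocations. If any single send fails here, the whole batch can be retried, including emails that already went out. For that reason, the sender may need to be idempotent.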

Front End UI

HTML-serving routes. While most of the front-end is an AngularJS single-page application (SPA), annoyingly a few pages still use server-side rendering in Express. I’ll need to either migrate these to Lambda or update the front-end to make them part of the SPA too. Downstream resources: MongoDB (Collections: Users).

I also uncovered several API routes that are now defunct and that I can just kill off. ✂️
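One way to sanity-check these boundaries is to encode the list as data and look for collections that more than one service uses. Shared collections are where services stay coupled through the database. The minimal sketch below transcribes the service list above. The `ServiceDef` type and `sharedCollections` helper are hypothetical names for illustration.

```typescript
interface ServiceDef {
  name: string;
  collections: string[];
  otherResources: string[];
}

// Transcribed from the service list above.
const services: ServiceDef[] = [
  { name: "Auth", collections: ["Users", "Sessions"], otherResources: ["Postmark"] },
  { name: "Customer Account Management", collections: ["CustomerAccounts", "Users"], otherResources: [] },
  { name: "Visitor Profile", collections: ["Visitors", "Notifications"], otherResources: [] },
  { name: "Visitor Search", collections: ["Visitors"], otherResources: [] },
  { name: "Reporting", collections: ["Visitors"], otherResources: [] },
  { name: "Event Metrics", collections: ["Visitors"], otherResources: ["Event Tracking Lambda"] },
  { name: "Activity Notifications", collections: ["Notifications", "Visitors"], otherResources: ["Postmark"] },
  { name: "Front End UI", collections: ["Users"], otherResources: [] },
];

// Maps each collection to the services that use it, keeping only
// collections used by more than one service.
function sharedCollections(defs: ServiceDef[]): Map<string, string[]> {
  const byCollection = new Map<string, string[]>();
  for (const svc of defs) {
    for (const coll of svc.collections) {
      const names = byCollection.get(coll) ?? [];
      names.push(svc.name);
      byCollection.set(coll, names);
    }
  }
  return new Map(Array.from(byCollection.entries()).filter(([, names]) => names.length > 1));
}

sharedCollections(services).forEach((names, coll) => {
  console.log(`${coll}: ${names.join(", ")}`);
});
```

Run against the list above, this flags `Users` (three services), `Visitors` (five) and `Notifications` (two). While services are migrated one at a time, new Lambda functions and the legacy Express app will be sharing these collections.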

Where to start?

So that’s quite a few services to migrate. Many of these have only one or two routes, but their complexity varies: some are basic CRUD while others perform more involved orchestrations.

I want to start with the simpler routes that are relatively low-risk but which still get significant traffic. After examining each one, I’m thinking of starting with the Event Metrics service:

- It gets quite a lot of traffic as it’s hit as soon as a user opens the home page of the portal.

- It’s read-only so I don’t need to worry about corrupting data.

- It currently uses Redis on ElastiCache to cache results, and I think API Gateway caching could replace this quite nicely.
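There is one detail to check before swapping Redis for API Gateway caching. API Gateway caches responses per stage for a configurable TTL (300 seconds by default). The cache key is built from the resource path plus any request parameters (such as headers or query strings) configured as cache keys. The metrics are account-specific, so the account (or the caller's token) has to be part of the cache key. Otherwise one account could be served another account's cached metrics. The following is a toy in-memory model of that behaviour, not API Gateway's implementation. The `ToyResponseCache` class and the `accountId` parameter are hypothetical.

```typescript
// Toy model of a response cache keyed on path plus selected parameters.
// Illustrative only; it does not reproduce API Gateway internals.
class ToyResponseCache {
  private readonly entries = new Map<string, { body: string; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly keyParams: string[]; // parameters included in the cache key

  constructor(ttlMs: number, keyParams: string[]) {
    this.ttlMs = ttlMs;
    this.keyParams = keyParams;
  }

  // Builds the cache key from the path and only the configured parameters.
  private key(path: string, params: Record<string, string>): string {
    const parts = this.keyParams.map((p) => `${p}=${params[p] ?? ""}`);
    return [path, ...parts].join("|");
  }

  // Returns the cached body if still fresh, otherwise calls the backend
  // and caches its response until now + ttlMs.
  async get(
    path: string,
    params: Record<string, string>,
    now: number,
    backend: () => Promise<string>,
  ): Promise<string> {
    const k = this.key(path, params);
    const hit = this.entries.get(k);
    if (hit && hit.expiresAt > now) return hit.body;
    const body = await backend();
    this.entries.set(k, { body, expiresAt: now + this.ttlMs });
    return body;
  }
}
```

With no key parameters, a second account hitting /all within the TTL gets the first account's response. Adding `accountId` to the key parameters separates them. In API Gateway itself, the equivalent settings are the stage's cache TTL and the method's cache key parameters.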

But before I start implementing the new Event Metrics service there are a few cross-cutting concerns that I need to address:

- Testing and CI/CD — I want each newly migrated route to pass integration and acceptance tests before being deployed into live usage. I plan to set up a pipeline with AWS CodePipeline/CodeBuild.

- Authentication & authorisation — almost all the API routes require the caller to be authenticated and authorised for their specific account. This is currently handled by middleware in the Express app, which uses the Users collection in MongoDB to store user profiles and credentials. Whilst I don’t yet want to migrate the login API route over to Lambda, I do need a way of verifying auth tokens in API requests and checking the user’s authorisation level in the database. I have a few options in mind: an API Gateway Lambda Authorizer, a shared middleware library that all Lambda route functions can use, or possibly a combination of both.
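To make the Lambda Authorizer option more concrete, here is a minimal sketch of a TOKEN-type authorizer for a REST API. It returns the shape API Gateway expects from an authorizer: `principalId`, an IAM `policyDocument` and an optional `context`. The token check is injected as a hypothetical `lookupSession` function, because how tokens map to records in the Sessions and Users collections is an assumption here. Keeping that check in a plain function is also what would let a shared middleware library reuse it, which would support the combined approach.

```typescript
// Subset of the event API Gateway passes to a TOKEN authorizer.
interface TokenAuthorizerEvent {
  type: "TOKEN";
  authorizationToken: string;
  methodArn: string;
}

interface AuthorizerResult {
  principalId: string;
  policyDocument: {
    Version: "2012-10-17";
    Statement: Array<{ Action: string; Effect: "Allow" | "Deny"; Resource: string }>;
  };
  // Key/value pairs passed through to the backend integration.
  context?: Record<string, string>;
}

// Hypothetical session record; the real Users/Sessions schema may differ.
interface SessionInfo {
  userId: string;
  accountId: string;
  role: string;
}

// Hypothetical lookup that validates a token against MongoDB.
type LookupSession = (token: string) => Promise<SessionInfo | null>;

// Builds a single-statement IAM policy for the invoked method.
function policy(principalId: string, effect: "Allow" | "Deny", resource: string): AuthorizerResult {
  return {
    principalId,
    policyDocument: {
      Version: "2012-10-17",
      Statement: [{ Action: "execute-api:Invoke", Effect: effect, Resource: resource }],
    },
  };
}

function makeAuthorizer(lookupSession: LookupSession) {
  return async (event: TokenAuthorizerEvent): Promise<AuthorizerResult> => {
    // Assumes a "Bearer <token>" header format.
    const token = event.authorizationToken.replace(/^Bearer\s+/i, "");
    const session = await lookupSession(token);
    if (!session) {
      // An explicit Deny makes API Gateway return 403; throwing
      // new Error("Unauthorized") would return 401 instead.
      return policy("anonymous", "Deny", event.methodArn);
    }
    const result = policy(session.userId, "Allow", event.methodArn);
    result.context = { accountId: session.accountId, role: session.role };
    return result;
  };
}
```

One caveat with this shape: API Gateway can cache authorizer results per token. A cached policy scoped to a single `methodArn` may then be applied to the same caller's requests for other methods. A broader resource pattern or a zero authorizer TTL avoids that.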

I will dive into both these areas in my next few posts, so stay tuned.

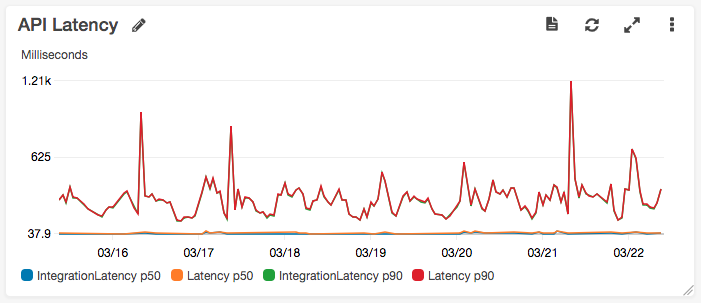

Monitoring Latency

Before finishing off, I want to touch on an operational concern around latency that I neglected to mention last week (thanks to Kyle Galbraith for pointing this out). Specifically, client API requests that haven’t yet been migrated over to Lambda now have to go through an extra network hop. Previously the request path was:

Browser -> ELB (us-east-1) -> EC2 (us-east-1)

whereas now it is:

Browser -> APIGW (edge) -> ELB (us-east-1) -> EC2 (us-east-1)

In the CloudWatch dashboard that the serverless-aws-alerts plugin auto-created for me, I can see the p90 latency of all requests that went through the API Gateway.

I’m not overly worried about these latencies, but I do hope to improve them as I replace requests to the legacy ELB with Lambda functions and start making use of API Gateway’s caching abilities. The fact that my API Gateway endpoint is edge-optimised should help, given that the vast majority of my customers are based in western Europe. (My initial choice to locate infrastructure in us-east-1 in the hope of conquering the North American market looks a bit silly now 😞)

Going forward, while the above graph depicts latency at an aggregate level, I want to see more granular per-path metrics on latency and errors for each new endpoint that I migrate over to Lambda. To that end, I’m going to trial Thundra during my migration process as I was impressed with its capabilities after a recent demo.
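Whatever tool ends up providing it, the per-path view I’m after comes down to a few steps. Group request records by path, then compute a count, an error rate and a latency percentile for each group. The sketch below does this over hypothetical request records. It uses the nearest-rank percentile definition, and CloudWatch's own percentile statistics may be computed differently. Treating only 5xx responses as errors is also an assumption.

```typescript
// Hypothetical per-request record, e.g. parsed from access logs.
interface RequestRecord {
  path: string;
  status: number;
  latencyMs: number;
}

interface PathStats {
  count: number;
  errorRate: number; // fraction of responses with status >= 500
  p90Ms: number;
}

// Nearest-rank percentile: the smallest sample such that at least p% of
// samples are at or below it. Expects a non-empty array.
function percentile(values: number[], p: number): number {
  const sorted = values.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Groups records by path and summarises each group.
function statsByPath(records: RequestRecord[]): Map<string, PathStats> {
  const groups = new Map<string, RequestRecord[]>();
  for (const r of records) {
    const list = groups.get(r.path) ?? [];
    list.push(r);
    groups.set(r.path, list);
  }
  const result = new Map<string, PathStats>();
  groups.forEach((list, path) => {
    const errors = list.filter((r) => r.status >= 500).length;
    result.set(path, {
      count: list.length,
      errorRate: errors / list.length,
      p90Ms: percentile(list.map((r) => r.latencyMs), 90),
    });
  });
  return result;
}
```

For ten requests with latencies of 10, 20, …, 100 ms, the nearest-rank p90 is the 9th smallest value, 90 ms.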

Next Steps

My immediate next steps are:

- Put a CI/CD pipeline and test framework in place to go from dev -> staging -> prod

- Implement authentication and authorisation middleware and test it on a dummy route

- Implement the Lambda for the Event Metrics route that I’ve identified as my first microservice to be migrated

As always, if you’ve any questions or suggestions on my decisions here, I’d love to hear them.
