Deploying API Gateway as a proxy in front of a legacy API — A Serverless Migration Decision Journal

This is part 3 in the series Migrating a Monolithic SaaS App to Serverless — A Decision Journal.

In the last update, I decided to put API Gateway in front of the classic ELB of the legacy app to enable me to gradually migrate routes away from it towards Lambda functions.

In this post, I get started on the implementation. Specifically, I’ll cover:

- Prepping the DNS to make it easier to manage via infrastructure-as-code going forward

- Should I start with a mono-repo or micro-repo approach for Git source control?

- Setting up a test Lambda-backed API endpoint

- How to proxy requests through API Gateway to my legacy back-end

- Setting up basic CloudWatch alerting

- Cutting over production traffic to API Gateway

Prepping the DNS in Route53

A secondary goal I have for this migration is to move away from a legacy AWS account (autochart-legacy), which mixes resources for the dev, staging and production environments with those for other unrelated projects/products, to dedicated, isolated accounts for dev/staging (autochart-dev) and production (autochart-prod).

So that meant migrating the records in the autochart.io Route53 hosted zone from the legacy account into the new autochart-prod production account. The AWS docs have detailed steps on how to do this, so it was relatively painless. At a high level, this was a 4-step process:

- Use AWS Route53 CLI to export JSON for my hosted zone record sets from the autochart-legacy account.

- Manually tidy up the JSON to remove unneeded entries.

- Use AWS Route53 CLI to import the JSON record sets into the new autochart-prod account.

- Update the name server records at my domain provider (Namecheap) to point to the hosted zone’s name servers.
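The "tidy up" step can be sketched in a few lines of Node.js. This is only an illustration, assuming the export came from `aws route53 list-resource-record-sets` and the import uses `aws route53 change-resource-record-sets`. The helper name `toChangeBatch` and the sample records are hypothetical, and skipping the apex NS and SOA records is my reading of the AWS migration guidance (the new hosted zone already has its own).

```javascript
// Sketch: turn exported record sets into a Route53 change batch.
// `toChangeBatch` is a hypothetical helper, not part of any AWS SDK.
function toChangeBatch(exported, zoneName) {
  // Route53 returns fully qualified names with a trailing dot.
  const apex = zoneName.endsWith('.') ? zoneName : zoneName + '.';

  const changes = exported.ResourceRecordSets
    // The new hosted zone already has its own apex NS and SOA records,
    // so those two are skipped; every other record is re-created as-is.
    .filter((rs) => !(rs.Name === apex && (rs.Type === 'NS' || rs.Type === 'SOA')))
    .map((rs) => ({ Action: 'CREATE', ResourceRecordSet: rs }));

  return { Comment: 'Migrated from legacy account', Changes: changes };
}

// Example input shaped like the CLI export (all values are made up).
const exported = {
  ResourceRecordSets: [
    { Name: 'autochart.io.', Type: 'NS', TTL: 172800, ResourceRecords: [{ Value: 'ns-1.example.' }] },
    { Name: 'autochart.io.', Type: 'SOA', TTL: 900, ResourceRecords: [{ Value: 'ns-1.example. admin.example. 1 7200 900 1209600 86400' }] },
    { Name: 'www.autochart.io.', Type: 'CNAME', TTL: 300, ResourceRecords: [{ Value: 'example.net' }] },
  ],
};

const batch = toChangeBatch(exported, 'autochart.io');
console.log(JSON.stringify(batch, null, 2));
```

The printed JSON is the kind of file that would be passed to the import command via its `--change-batch` option.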

I also set up 2 hosted zones in my autochart-dev AWS account for the dev.autochart.io and staging.autochart.io subdomains and created name server records in the root hosted zone in order to delegate DNS to the child hosted zones. This was pretty straightforward following these steps.

Finally, I created a new alias record, portal-legacy.autochart.io, which points at the same classic load balancer address that my production API’s subdomain portal.autochart.io currently points to. This new DNS entry will be used to configure the back-end location for my proxied API Gateway requests, as you’ll see below.

🔥 Tip: to test that the new name servers were in effect, I temporarily switched my MacBook’s DNS from Cloudflare DNS (1.1.1.1) to Google Public DNS (8.8.8.8), as the former still seemed to be caching my old name servers. I was then able to run a dig command to verify that my newly created DNS entry was picked up OK.
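The same check can be scripted instead of run by hand with dig. The sketch below is a Node.js equivalent under some assumptions: it uses the built-in `dns.promises.Resolver` (available in Node versions newer than the 8.10 runtime used later in this post), and the helper names `sameNameServers` and `lookupNameServers` are hypothetical.

```javascript
const { Resolver } = require('dns').promises;

// Normalise name server hostnames so that case and trailing dots do not matter.
function normalise(names) {
  return names.map((n) => n.toLowerCase().replace(/\.$/, '')).sort();
}

// True when both lists contain the same name servers, in any order.
function sameNameServers(expected, actual) {
  const a = normalise(expected);
  const b = normalise(actual);
  return a.length === b.length && a.every((name, i) => name === b[i]);
}

// Ask one specific public resolver (8.8.8.8 by default) for a domain's NS records.
async function lookupNameServers(domain, resolverIp = '8.8.8.8') {
  const resolver = new Resolver();
  resolver.setServers([resolverIp]);
  return resolver.resolveNs(domain);
}

module.exports = { sameNameServers, lookupNameServers };
```

A possible usage is `lookupNameServers('autochart.io').then((actual) => console.log(sameNameServers(expected, actual)))`, where `expected` is the list of name servers shown for the new hosted zone in the Route53 console.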

Now that I have hosted zones set up in my new accounts, I can use the Serverless Framework (and CloudFormation) to manage my DNS records through infrastructure as code.

Mono-repo or multi-repos?

I’m now ready to get API Gateway set up. I’ll be using the Serverless Framework to do this. So my next question is — where do I put my code?

I know I definitely don’t want to use my existing Git repo for the monolith Express app. I also know that my end goal is to have a set of microservices with clear boundaries, each with its own serverless.yml file. I can see the benefits of enforcing this separation at the repo level.

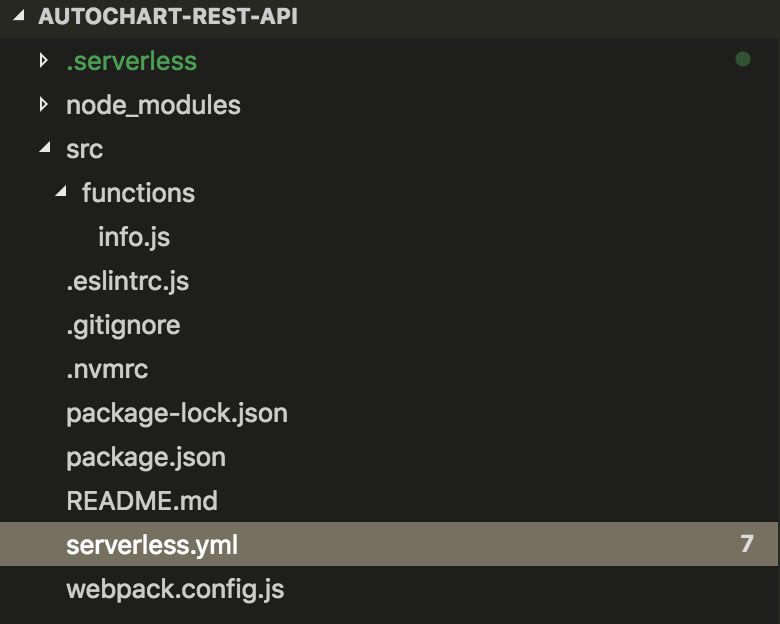

However, I haven’t yet identified these boundaries and everything is still somewhat in flux. I don’t know what code I might need to share between services. So I’m going to start with a single “mono-repo” for now and give it the somewhat generic name of autochart-rest-api.

I’m using a simple folder structure to start off with, but I expect I’ll restructure things as I start identifying service boundaries and implementing Lambda functions.

Setting up API Gateway with my custom domain

Since I want to point my live subdomain (portal.autochart.io) at my API Gateway, I need to configure my API Gateway instance to use a custom domain.

Alex DeBrie has a great post on the Serverless.com blog showing how to do this. To summarise, I did the following:

- Used the Certificate Manager console to create certificates in my autochart-dev and autochart-prod accounts. The former had the following subdomains: *.dev.autochart.io, dev.autochart.io, *.staging.autochart.io and staging.autochart.io, while the latter had these: *.autochart.io and autochart.io.

- Used the serverless-domain-manager plugin and its CLI commands to configure and deploy the custom domains using the correct certificates.

Creating a test route

I created a simple “hello world” style Lambda function which will act as the handler for a new test route /api/1/info. Here’s how that looks in serverless.yml:

```yaml
functions:
  info:
    handler: src/functions/info.handle
    events:
      - http: GET ${self:custom.apiRoot}/info
```

I then deployed this route to the production account. Since I’m not yet ready to route production traffic via the API Gateway, I have configured it to use a temporary custom subdomain of portal2.autochart.io.

I can then test my test route handler with a simple cURL command: curl https://portal2.autochart.io/api/1/info.

Proxying requests through to the legacy backend

I now need to tell API Gateway to proxy all requests that aren’t for the above “info” route through to my legacy ELB backend. To do this, I use what is called “HTTP Proxy Integration” for API Gateway.

This was the step which caused me the most hassle as the AWS docs on this subject didn’t tell me everything I needed to do to get this working. I spent a few hours trying different configs until I eventually found this blog post on setting up an Api Gateway Proxy Resource using Cloudformation by someone who had hit upon the same issue as I was seeing and documented how he fixed it. 😀

Here’s my full serverless.yml configuration so far:

```yaml
service: ac-rest-api

custom:
  stage: ${opt:stage, self:provider.stage}
  profile:
    dev: autochart_dev
    prod: autochart_prod
  apiRoot: api/1
  domains:
    dev: portal.dev.autochart.io
    prod: portal2.autochart.io # to be changed to portal.autochart.io after testing
  hostedZones:
    dev: Z38AD79BYT7000
    prod: Z3PI5JUWKKW999
  # Automatically create DNS record from `domain` to API Gateway's custom domain?
  createRoute53Record:
    dev: true
    prod: false
  # URL where back-end exists
  backendUrls:
    dev: "https://portal-legacy-staging.autochart.io"
    prod: "https://portal-legacy.autochart.io"
  # Host header to be passed through to back-end
  backendHostHeaders:
    dev: "portal-staging.autochart.io"
    prod: "portal.autochart.io"
  # config for serverless-domain-manager plugin
  customDomain:
    domainName: ${self:custom.domains.${self:custom.stage}}
    stage: ${self:custom.stage}
    createRoute53Record: ${self:custom.createRoute53Record.${self:custom.stage}}
    hostedZoneId: ${self:custom.hostedZones.${self:custom.stage}}

provider:
  name: aws
  runtime: nodejs8.10
  stage: dev
  profile: ${self:custom.profile.${self:custom.stage}}

package:
  individually: true

functions:
  info:
    handler: src/functions/info.handle
    events:
      - http: GET ${self:custom.apiRoot}/info

resources:
  Resources:
    ProxyResource:
      Type: AWS::ApiGateway::Resource
      Properties:
        ParentId: !GetAtt ApiGatewayRestApi.RootResourceId
        RestApiId: !Ref ApiGatewayRestApi
        PathPart: '{proxy+}'
    RootMethod:
      Type: AWS::ApiGateway::Method
      Properties:
        ResourceId: !GetAtt ApiGatewayRestApi.RootResourceId
        RestApiId: !Ref ApiGatewayRestApi
        AuthorizationType: NONE
        HttpMethod: ANY
        Integration:
          Type: HTTP_PROXY
          IntegrationHttpMethod: ANY
          Uri: ${self:custom.backendUrls.${self:custom.stage}}
          PassthroughBehavior: WHEN_NO_MATCH
          RequestParameters:
            integration.request.header.Host: "'${self:custom.backendHostHeaders.${self:custom.stage}}'"
    ProxyMethod:
      Type: AWS::ApiGateway::Method
      Properties:
        ResourceId: !Ref ProxyResource
        RestApiId: !Ref ApiGatewayRestApi
        AuthorizationType: NONE
        HttpMethod: ANY
        RequestParameters:
          method.request.path.proxy: true
        Integration:
          Type: HTTP_PROXY
          IntegrationHttpMethod: ANY
          Uri: ${self:custom.backendUrls.${self:custom.stage}}/{proxy}
          PassthroughBehavior: WHEN_NO_MATCH
          RequestParameters:
            integration.request.path.proxy: 'method.request.path.proxy'
            integration.request.header.Host: "'${self:custom.backendHostHeaders.${self:custom.stage}}'"

plugins:
  - serverless-pseudo-parameters
  - serverless-webpack
  - serverless-domain-manager
```

There are a few important things to note here:

- All the HTTP proxy configuration needs to be written in raw CloudFormation in the Resources section of the file as there are no Serverless plugins (that I could find) that do this yet.

- The line PathPart: '{proxy+}' is how I create a resource that acts as a catch-all handler at the root.

- I needed to create a separate RootMethod as the '{proxy+}' wildcard doesn’t handle the root path.

- I needed to set an integration.request.path.proxy in the RequestParameters in order to map the path from the client request through to the proxied back-end request.

- My backend (for legacy reasons) requires an HTTP Host header to match an expected value in order for it to return a valid response. I did this by passing a fixed value to the integration.request.header.Host RequestParameter.
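To make the combined effect of these settings easier to picture, here is a small Node.js model of how a request ends up being routed. It is a deliberately simplified sketch and not how API Gateway is implemented: the `route` function and `config` object are hypothetical, the values are the prod ones from the configuration above, and requests that hit an existing resource with an undefined method are not modelled.

```javascript
// Simplified model of the routing described above; not how API Gateway is implemented.
const config = {
  backendUrl: 'https://portal-legacy.autochart.io', // custom.backendUrls.prod
  backendHost: 'portal.autochart.io',               // custom.backendHostHeaders.prod
  lambdaRoutes: ['GET /api/1/info'],                // routes already migrated to Lambda
};

function route(method, path) {
  // 1. An explicitly defined resource and method takes priority over the greedy proxy.
  if (config.lambdaRoutes.includes(`${method} ${path}`)) {
    return { target: 'lambda' };
  }
  // 2. The root path is not matched by {proxy+}, hence the separate RootMethod.
  if (path === '/') {
    return { target: 'http_proxy', url: config.backendUrl, headers: { Host: config.backendHost } };
  }
  // 3. Everything else: {proxy+} captures the path without its leading slash,
  //    and the integration substitutes it into `<backendUrl>/{proxy}`.
  const proxy = path.replace(/^\//, '');
  return {
    target: 'http_proxy',
    url: `${config.backendUrl}/${proxy}`,
    headers: { Host: config.backendHost },
  };
}

console.log(route('GET', '/api/1/info'));
console.log(route('GET', '/'));
console.log(route('GET', '/login'));
```

As more routes are migrated, only the equivalent of `lambdaRoutes` grows; the catch-all keeps forwarding everything else to the legacy back-end with the fixed Host header.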

Testing the proxied requests

After deploying my updated API Gateway configuration to my production account, I again used cURL for some request testing. For example, by running curl -I https://portal2.autochart.io/login I can see that I get the correct response from the backend. I can also see new HTTP headers that API Gateway has added in, prefixed x-amzn-:

```text
$ curl -I https://portal2.autochart.io/login
HTTP/2 200
content-type: text/html; charset=utf-8
content-length: 5166
date: Mon, 11 Mar 2019 11:24:23 GMT
x-amzn-requestid: 38d955b4-43f0-11e9-bcb9-9fe9969752ee
x-amzn-remapped-content-length: 5166
x-amzn-remapped-connection: keep-alive
set-cookie: connect.sid=s%3AJusMzBoqngb7-ggEs2MK0rPsxc8Ed4zG.JFQnY8S26GVJV2HtvdnhpMdIW7xNeFD7SvnOsbfuYnY; Path=/; HttpOnly
x-amz-apigw-id: WX_IMHFgIAMF6Iw=
vary: Accept-Encoding
x-amzn-remapped-server: nginx/1.14.0
x-powered-by: Express
etag: W/"142e-3RGZdhc0ZhtNpKgqQqHjwzVK82E"
x-amzn-remapped-date: Mon, 11 Mar 2019 11:24:23 GMT
x-cache: Miss from cloudfront
via: 1.1 bb45ea5b3a4c19db9fecccf1bc9e803d.cloudfront.net (CloudFront)
x-amz-cf-id: mJYOlSyTbkRHUnEZ4tiq0I3ohMIk2jddmBOyiQtOjgX7ZsrNrjbvPw==
```

I also ran a cURL command against my test route to ensure it did NOT get proxied through, and it worked correctly.

Unfortunately, I don’t have an automated end-to-end test suite to run. My existing automated tests in the legacy codebase are either at a unit or integration level and can’t be used here. This is something that I hope to fix as I go through the migration process by writing an end-to-end test for each endpoint being migrated.

Whilst having an end-to-end test suite here would give me more confidence, the combination of the manual cURL and browser testing that I’ve performed has sufficiently reassured me that it’s safe enough to proceed with switching production traffic over.

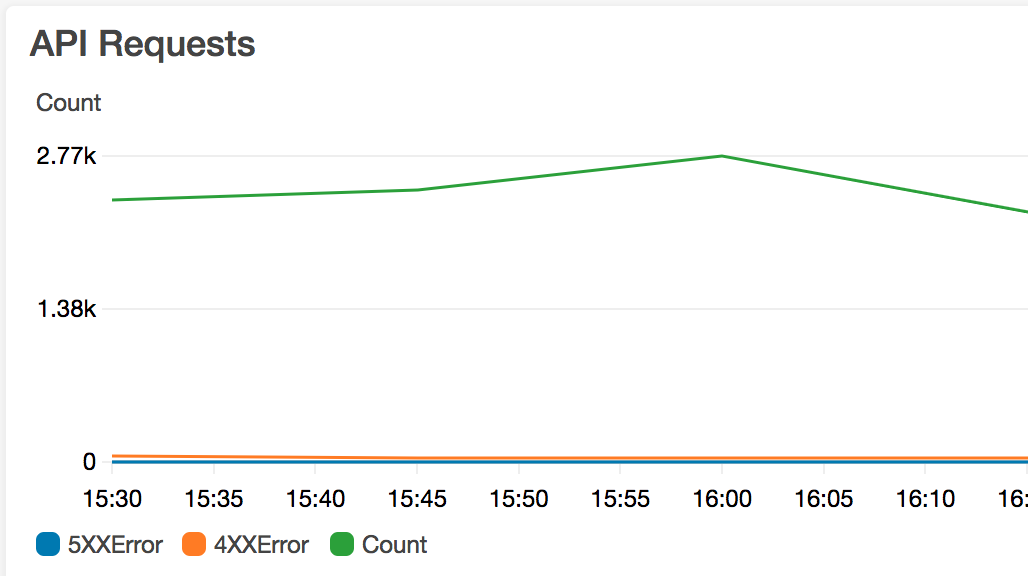

Setting up monitoring

But before I do so, I want to set up some basic alerting using CloudWatch alarms so that I’ll be notified if any errors start happening. I already have this in place for my classic Elastic Load Balancer if it starts seeing regular HTTP 500 errors, so I want to recreate the same level of monitoring here.

To do this, I used the serverless-aws-alerts plugin to set up a single alarm like so:

```yaml
# Cloudwatch Alarms
alerts:
  stages:
    - prod
  dashboards: true
  topics:
    alarm:
      topic: ${self:service}-${opt:stage}-alerts-alarm
      notifications:
        - protocol: email
          endpoint: myname+alerts@company.com
  definitions:
    http500ErrorsAlarm:
      description: 'High HTTP 500 errors from API Gateway'
      namespace: 'AWS/ApiGateway'
      metric: 5XXError
      threshold: 5
      statistic: Sum
      period: 60
      evaluationPeriods: 3
      comparisonOperator: GreaterThanOrEqualToThreshold
      treatMissingData: notBreaching
  alarms:
    - http500ErrorsAlarm
```

This also generates a nice dashboard for me within the CloudWatch console.
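In plain terms, this alarm fires when there are 5 or more 5XX responses per minute for 3 minutes in a row, and a minute with no data counts as healthy. The Node.js sketch below models that rule under simplifying assumptions: the `isInAlarm` function is hypothetical, and CloudWatch's real evaluation is more nuanced when missing datapoints are mixed in with real ones.

```javascript
// Simplified model of the alarm defined above; CloudWatch's real evaluation has more nuance.
const alarm = { threshold: 5, evaluationPeriods: 3 }; // period: 60s, statistic: Sum

// `sums` holds the 5XXError Sum for each one-minute period, oldest first.
// `null` stands for a period with no data (for example, no traffic).
function isInAlarm(sums) {
  const recent = sums.slice(-alarm.evaluationPeriods);
  if (recent.length < alarm.evaluationPeriods) return false;
  return recent.every((sum) => {
    // treatMissingData: notBreaching -> a missing datapoint counts as healthy.
    if (sum === null) return false;
    // comparisonOperator: GreaterThanOrEqualToThreshold
    return sum >= alarm.threshold;
  });
}

console.log(isInAlarm([0, 6, 7, 5]));        // three breaching minutes in a row
console.log(isInAlarm([20, 0, 0, 4]));       // one spike, then recovery
console.log(isInAlarm([null, null, null]));  // no traffic at all
```

Only the first series puts the alarm into the ALARM state in this model. A single spike does not, which is the point of requiring 3 consecutive periods.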

Switching production traffic over to API Gateway

So far, I’ve been using portal2.autochart.io as the custom domain for my API Gateway in the production account. We’re now ready to change this to be portal.autochart.io.

I thought that this would be merely a matter of updating the DNS entry, however it’s not quite so simple.

When I configured API Gateway to use a custom domain (via the serverless-domain-manager plugin’s create_domain command), AWS internally created a CloudFront distribution to hold the SSL certificate, which sits in front of the actual API Gateway (that’s why there are CloudFront headers in my cURL response above).

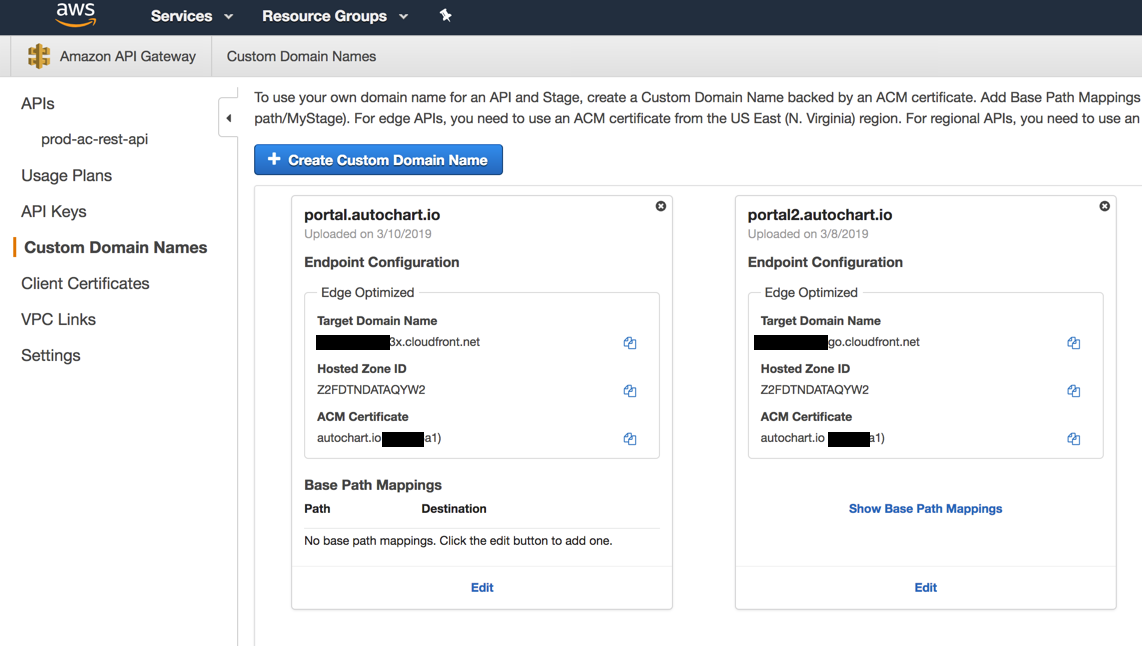

The DNS name of this internal CloudFront distribution is what I need my DNS to point to in order to perform the cutover. However, it seems that this CloudFront distribution is tied to the single portal2 subdomain I initially created it with. Therefore, I first need to update my custom.domains.prod entry in serverless.yml to portal.autochart.io and then run sls create_domain --stage prod again. It can take up to 40 minutes for this new custom domain to be created.

Once it’s created, you can see the custom domain names in the API GW console.

I then ran sls deploy --stage prod in order to create the base path mapping for this custom domain.

My final step was to manually update the Route53 DNS record for portal.autochart.io to point to the Target Domain Name of the newly created portal.autochart.io APIGW custom domain name. The API Gateway is now live!

As a quick smoke test, I re-ran the same cURL commands and manual browser tests I did previously. I used the amzn- specific HTTP headers to verify that the DNS had updated and my requests were being served from API Gateway.
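This header check is easy to automate as a first step towards an end-to-end smoke test. The sketch below is one possible way to do it in Node.js, assuming the response headers have been captured as text (for example from `curl -I`). The helper names are hypothetical, and the two header names are taken from the cURL output shown earlier.

```javascript
// Header names taken from the cURL output earlier in the post.
const API_GATEWAY_HEADERS = ['x-amzn-requestid', 'x-amz-apigw-id'];

// `headers` is a plain object of response headers keyed by header name.
function servedByApiGateway(headers) {
  const names = Object.keys(headers).map((name) => name.toLowerCase());
  return API_GATEWAY_HEADERS.every((expected) => names.includes(expected));
}

// Parse the raw text printed by `curl -I` into a { name: value } object.
function parseHeaders(raw) {
  const headers = {};
  raw.split(/\r?\n/).forEach((line) => {
    const idx = line.indexOf(':');
    // Lines without a colon (such as the status line) are skipped.
    if (idx > 0) headers[line.slice(0, idx).trim().toLowerCase()] = line.slice(idx + 1).trim();
  });
  return headers;
}

const viaGateway = parseHeaders([
  'HTTP/2 200',
  'content-type: text/html; charset=utf-8',
  'x-amzn-requestid: 38d955b4-43f0-11e9-bcb9-9fe9969752ee',
  'x-amz-apigw-id: WX_IMHFgIAMF6Iw=',
].join('\n'));

console.log(servedByApiGateway(viaGateway));                 // headers from API Gateway
console.log(servedByApiGateway({ server: 'nginx/1.14.0' })); // headers straight from the ELB
```

A response that lacks both headers suggests the request is still resolving to the classic load balancer, for example because of a cached DNS entry.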

So far, all is looking good! 👍

Next steps

Now that I have the API Gateway live and project code structure in place, I can start my analysis of the monolith API to identify what microservices I can extract and thus what routes I can start implementing Lambdas for.

There are a few questions that have come into my mind that I’ll need to address soon, namely:

- I want to put a CI/CD process in place that promotes code from dev => staging => prod (I have only used the dev and prod stages to date). I’ll need to design my approach for doing this and have the process in place before I release my first “real” Lambda function implementation to production.

- Can I do a canary release of each new Lambda for an API route in order to reduce the risk of introducing it? E.g. 5% of requests to this path use the new Lambda while the rest continue to go through the catch-all handler to the ELB? I know Lambda supports canary releases for different versions of a function, but I don’t think this would help here.

If you have any suggestions or recommendations on any of these, please let me know.
