Building CICD pipelines for serverless microservices using the AWS CDK

This is part 5 in the series Migrating a Monolithic SaaS App to Serverless — A Decision Journal.

In order to make sure that each new route I migrate over from the legacy Express.js API to Lambda is thoroughly tested before releasing to production, I have started to put a CICD process in place.

My main goals for the CICD process are:

- Each Serverless service will have its own pipeline as each will be deployed independently.

- Merges to master should trigger the main pipeline which will perform tests and deployments through dev to staging to production.

- The CICD pipelines will be hosted in the DEV AWS account but must be able to deploy to other AWS accounts.

- All CICD config will be done via infrastructure-as-code so I can easily set up pipelines for new services as I create them. Console access will be strictly read-only.

I have decided to use AWS CodePipeline and CodeBuild to create the pipelines, as they're serverless and have built-in IAM support that I can use to deploy securely to production. CodePipeline acts as the orchestrator, whereas CodeBuild acts as the task runner. Each stage in a CodePipeline pipeline consists of one or more actions, which call out to a CodeBuild project containing the actual commands to create a release package, run tests, perform deployments, etc.
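To make the orchestrator/task-runner split concrete, here is a minimal, dependency-free TypeScript model of it. It is not the CodePipeline API: PipelineStage, PipelineAction and runPipeline are hypothetical names, and each action's run function merely stands in for a CodeBuild project executing its buildspec. It does capture the behaviour that matters here: stages run in order, and if any action in a stage fails, the execution stops at that stage so later stages never start.

```typescript
// Hypothetical model of the CodePipeline (orchestrator) / CodeBuild (task runner) split.
// These types are NOT the AWS API; they only illustrate the roles.
type ActionResult = "Succeeded" | "Failed";

interface PipelineAction {
  name: string;
  // Stands in for a CodeBuild project running the commands in its buildspec.
  run: () => ActionResult;
}

interface PipelineStage {
  name: string;
  actions: PipelineAction[];
}

// Runs stages in order. Every action in a stage is executed; if any of them
// fails, the pipeline stops and later stages are never started.
function runPipeline(stages: PipelineStage[]): { ran: string[]; failedStage?: string } {
  const ran: string[] = [];
  for (const stage of stages) {
    ran.push(stage.name);
    const results = stage.actions.map((action) => action.run());
    if (results.some((r) => r === "Failed")) {
      return { ran, failedStage: stage.name };
    }
  }
  return { ran };
}

const ok = (): ActionResult => "Succeeded";
const fail = (): ActionResult => "Failed";

// Example: a failed STAGING deployment means PROD is never attempted.
const result = runPipeline([
  { name: "Source", actions: [{ name: "GitHub_PushToMaster", run: ok }] },
  { name: "Build_Deploy_DEV", actions: [{ name: "Build_Deploy_DEV", run: ok }] },
  { name: "Deploy_STAGING", actions: [{ name: "Deploy_STAGING", run: fail }] },
  { name: "Deploy_PROD", actions: [{ name: "Deploy_PROD", run: ok }] },
]);
console.log(result.ran.join(" -> "), "| failed:", result.failedStage);
```

In the real service, actions that share a run order within a stage execute in parallel; this sketch runs them one after another for simplicity.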

Pipeline overview

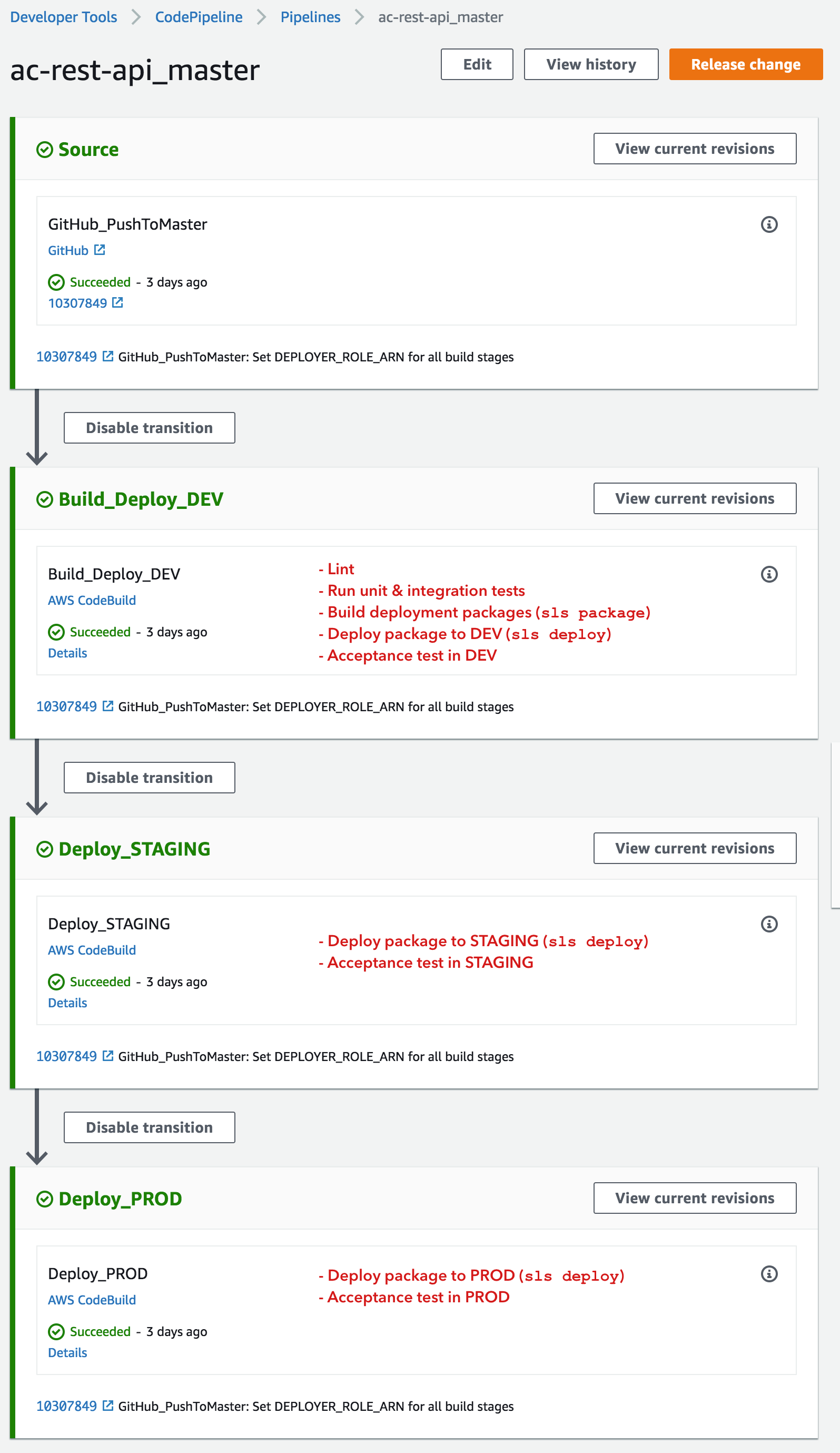

For the first version of my CICD process, I will be deploying a single service ac-rest-api (which currently only contains a single test Lambda function + API GW route). This pipeline will work as follows:

- GitHub source action triggers whenever code is pushed to the master branch of the repo hosting my ac-rest-api service.

- Run lint + unit/integration tests, and build deployment package (using the sls package command).

- Deploy package (using sls deploy) to the DEV stage and run acceptance tests against it.

- Deploy package to the STAGING stage and run acceptance tests against it.

- Deploy package to the PROD stage and run acceptance tests against it.

Here’s what my completed pipeline looks like:

Managing pipelines as infrastructure-as-code

As with most AWS services, it's easy to find tutorials on how to create a CodePipeline pipeline using the AWS Console, but difficult to find detailed infrastructure-as-code examples. CodeBuild fares better in this regard, as the docs generally seem to recommend using a buildspec.yml file in your repo to specify the build commands.

Since I will be creating a pipeline for each microservice I identify as I go through the migration process, I want something I can easily reuse.

I considered these options for defining my pipelines:

- Use raw CloudFormation YAML files.

- Use CloudFormation inside the resources section of serverless.yml.

- Use the new AWS Cloud Development Kit (CDK), which allows you to define high-level infrastructure constructs using popular languages (JavaScript/TypeScript, Java, C#) and generates CloudFormation stacks under the hood.

I’m relatively proficient with CloudFormation but I find its dev experience very frustrating and in the past have burned days getting complex CloudFormation stacks up and running. My main gripes with it are:

- The slow feedback loop between authoring and seeing a deployment complete (fail or succeed)

- The lack of templating/modules, which makes it difficult to reuse templates without resorting to copying and pasting a load of boilerplate (I'm aware of tools like Troposphere that help in this regard, but I'm a JavaScript developer and don't want to have to learn a new language, Python, to use them)

- I always need to have the docs open in a browser tab to see the available properties on a resource

Given this, I decided to proceed with using the CDK which (in theory) should help mitigate all 3 of these complaints.

Creating my CDK app

The CDK provides a CLI that you use to deploy an “app”. An app in this context is effectively a resource tree that you compose using an object-oriented programming model, where each node in the tree is called a “construct”. The CDK provides ready-made constructs for all the main AWS resources and also allows (and encourages) you to write your own. A CDK app contains at least one CloudFormation stack construct, which it uses to instigate the deployment under the hood. So you still get the automatic rollback benefit that CloudFormation gives you.
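As a rough mental model of that resource tree, here is a tiny TypeScript sketch in which every node is created inside a parent scope with an id, and gets a unique path by joining the ids from the root. The TreeNode class is hypothetical and far simpler than the CDK's real Construct class, but the (scope, id) pattern is the same one that appears in constructor signatures such as ServicePipeline(scope, id, props) below.

```typescript
// A deliberately tiny, hypothetical model of a construct tree.
// It is NOT the CDK's Construct class; it only illustrates the scope/id idea.
class TreeNode {
  readonly children: TreeNode[] = [];

  constructor(readonly scope: TreeNode | undefined, readonly id: string) {
    if (scope) {
      // Ids only have to be unique among siblings, not across the whole tree.
      if (scope.children.some((child) => child.id === id)) {
        throw new Error(`Duplicate id '${id}' under '${scope.path}'`);
      }
      scope.children.push(this);
    }
  }

  // Unique path from the root, built by joining ids with "/".
  get path(): string {
    return this.scope ? `${this.scope.path}/${this.id}` : this.id;
  }

  // Depth-first list of this node and everything beneath it.
  descendants(): TreeNode[] {
    const all: TreeNode[] = [this];
    for (const child of this.children) {
      all.push(...child.descendants());
    }
    return all;
  }
}

// Illustrative names only, loosely shaped like the CICD app described below.
const app = new TreeNode(undefined, "App");
const stack = new TreeNode(app, "cicd-pipelines-stack");
const pipeline = new TreeNode(stack, "ac-rest-api");
new TreeNode(pipeline, "buildProject");
new TreeNode(pipeline, "deploy-staging");

console.log(app.descendants().map((n) => n.path));
```

Synthesis in the real CDK walks a tree like this and emits one CloudFormation template per stack node.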

For more detailed background on what the CDK is and how you can install it and bootstrap your own app, I'd recommend starting here.

I also decided to try out TypeScript (instead of plain JavaScript), as the CDK itself is written in TypeScript and strong typing seems to be a good fit for infrastructure resources with complex sets of attributes.

I started by creating a custom top-level construct called ServiceCicdPipelines which acts as a container for all the pipelines for each service I’ll be creating and any supplementary resources. I’ve included all the source code in this gist, but the main elements of it are as follows:

- cicd-pipelines-stack: single CloudFormation stack for deploying all the CICD-related resources.

- pipelines[]: list of ServicePipeline objects, a custom construct which encapsulates the logic for creating a single pipeline for a single Serverless service.

- alertsTopic: SNS topic which will receive notifications about pipeline errors.

The custom ServicePipeline construct is where most of the logic lies. It takes a ServiceDefinition object as a parameter, which looks like this:

```typescript
export interface ServiceDefinition {
  serviceName: string;
  githubRepo: string;
  githubOwner: string;
  githubTokenSsmPath: string;
  /** Permissions that CodeBuild role needs to assume to deploy serverless stack */
  deployPermissions: PolicyStatement[];
}
```

This object is where all the unique attributes of the service being deployed are defined. At the moment, I don't have many options in here but I expect to add to this over time.
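For illustration, a definition for the ac-rest-api service might look roughly like the sketch below. To keep it self-contained, PolicyStatementLike is a hypothetical stand-in for the CDK's PolicyStatement class, and the owner, SSM parameter path and permission are placeholder values, not the real ones. The validateServiceDefinition helper is also an assumption: one cheap way to catch a misconfigured definition before the CDK synthesizes a pipeline from it.

```typescript
// Stand-in for the CDK's PolicyStatement so this sketch has no dependencies.
interface PolicyStatementLike {
  effect: "Allow" | "Deny";
  actions: string[];
  resources: string[];
}

// Same shape as ServiceDefinition above, but using the stand-in type.
interface ServiceDefinition {
  serviceName: string;
  githubRepo: string;
  githubOwner: string;
  githubTokenSsmPath: string;
  deployPermissions: PolicyStatementLike[];
}

// Hypothetical example values; the real owner, path and permissions differ.
const acRestApi: ServiceDefinition = {
  serviceName: "ac-rest-api",
  githubRepo: "ac-rest-api",
  githubOwner: "example-org",
  githubTokenSsmPath: "/example/github-token",
  deployPermissions: [
    { effect: "Allow", actions: ["cloudformation:DescribeStacks"], resources: ["*"] },
  ],
};

// Returns a list of problems; an empty list means the definition looks usable.
// The checks encode assumed conventions, since serviceName is reused in
// pipeline, CodeBuild project and IAM role names.
function validateServiceDefinition(def: ServiceDefinition): string[] {
  const problems: string[] = [];
  if (!/^[a-z][a-z0-9-]*$/.test(def.serviceName)) {
    problems.push("serviceName should be lowercase letters, digits and hyphens");
  }
  if (!def.githubOwner || !def.githubRepo) {
    problems.push("githubOwner and githubRepo are required");
  }
  if (!def.githubTokenSsmPath) {
    problems.push("githubTokenSsmPath is required");
  }
  if (def.deployPermissions.length === 0) {
    problems.push("deployPermissions must not be empty");
  }
  return problems;
}

console.log(validateServiceDefinition(acRestApi));
```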

The ServicePipeline construct is also where each stage of the pipeline is defined:

```typescript
export class ServicePipeline extends Construct {
  readonly pipeline: Pipeline;
  // Only created when an alerts topic is supplied, so it may be undefined.
  readonly alert?: PipelineFailedAlert;

  constructor(scope: Construct, id: string, props: ServicePipelineProps) {
    super(scope, id);
    const pipelineName = `${props.service.serviceName}_${props.sourceTrigger}`;
    this.pipeline = new Pipeline(scope, pipelineName, {
      pipelineName,
    });

    // Read GitHub OAuth token from SSM Parameter Store
    // https://docs.aws.amazon.com/codepipeline/latest/userguide/GitHub-rotate-personal-token-CLI.html
    const oauth = new SecretParameter(scope, 'GithubPersonalAccessToken', {
      ssmParameter: props.service.githubTokenSsmPath,
    });

    const sourceAction = new GitHubSourceAction({
      actionName: props.sourceTrigger === SourceTrigger.PullRequest ? 'GitHub_SubmitPR' : 'GitHub_PushToMaster',
      owner: props.service.githubOwner,
      repo: props.service.githubRepo,
      branch: 'master',
      oauthToken: oauth.value,
      outputArtifactName: 'SourceOutput',
    });
    this.pipeline.addStage({
      name: 'Source',
      actions: [sourceAction],
    });

    // Create stages for DEV => STAGING => PROD.
    // Each stage defines its own steps in its own build file
    const buildProject = new ServiceCodebuildProject(this.pipeline, 'buildProject', {
      projectName: `${pipelineName}_dev`,
      buildSpec: 'buildspec.dev.yml',
      deployerRoleArn: CrossAccountDeploymentRole.getRoleArnForService(
        props.service.serviceName, 'dev', props.deploymentTargetAccounts.dev.accountId,
      ),
    });
    const buildAction = buildProject.project.toCodePipelineBuildAction({
      actionName: 'Build_Deploy_DEV',
      inputArtifact: sourceAction.outputArtifact,
      outputArtifactName: 'sourceOutput',
      additionalOutputArtifactNames: [
        'devPackage',
        'stagingPackage',
        'prodPackage',
      ],
    });
    this.pipeline.addStage({
      name: 'Build_Deploy_DEV',
      actions: [buildAction],
    });

    const stagingProject = new ServiceCodebuildProject(this.pipeline, 'deploy-staging', {
      projectName: `${pipelineName}_staging`,
      buildSpec: 'buildspec.staging.yml',
      deployerRoleArn: CrossAccountDeploymentRole.getRoleArnForService(
        props.service.serviceName, 'staging', props.deploymentTargetAccounts.staging.accountId,
      ),
    });
    const stagingAction = stagingProject.project.toCodePipelineBuildAction({
      actionName: 'Deploy_STAGING',
      inputArtifact: sourceAction.outputArtifact,
      additionalInputArtifacts: [
        buildAction.additionalOutputArtifact('stagingPackage'),
      ],
    });
    this.pipeline.addStage({
      name: 'Deploy_STAGING',
      actions: [stagingAction],
    });

    // Prod stage requires cross-account access as CodeBuild isn't running in the same account
    const prodProject = new ServiceCodebuildProject(this.pipeline, 'deploy-prod', {
      projectName: `${pipelineName}_prod`,
      buildSpec: 'buildspec.prod.yml',
      deployerRoleArn: CrossAccountDeploymentRole.getRoleArnForService(
        props.service.serviceName, 'prod', props.deploymentTargetAccounts.prod.accountId,
      ),
    });
    const prodAction = prodProject.project.toCodePipelineBuildAction({
      actionName: 'Deploy_PROD',
      inputArtifact: sourceAction.outputArtifact,
      additionalInputArtifacts: [
        buildAction.additionalOutputArtifact('prodPackage'),
      ],
    });
    this.pipeline.addStage({
      name: 'Deploy_PROD',
      actions: [prodAction],
    });

    // Wire up pipeline error notifications
    if (props.alertsTopic) {
      this.alert = new PipelineFailedAlert(this, 'pipeline-failed-alert', {
        pipeline: this.pipeline,
        alertsTopic: props.alertsTopic,
      });
    }
  }
}

export interface ServicePipelineProps {
  /** Information about service to be built & deployed (source repo, etc) */
  service: ServiceDefinition;
  /** Trigger on PR or Master merge? */
  sourceTrigger: SourceTrigger;
  /** Account details for where this service will be deployed to */
  deploymentTargetAccounts: DeploymentTargetAccounts;
  /** Optional SNS topic to send pipeline failure notifications to */
  alertsTopic?: Topic;
}

/** Wrapper around the CodeBuild Project to set standard props and create IAM role */
export class ServiceCodebuildProject extends Construct {
  readonly buildRole: Role;
  readonly project: Project;

  constructor(scope: Construct, id: string, props: ServiceCodebuildActionProps) {
    super(scope, id);
    this.buildRole = new ServiceDeployerRole(this, 'project-role', {
      deployerRoleArn: props.deployerRoleArn,
    }).buildRole;
    this.project = new Project(this, 'build-project', {
      projectName: props.projectName,
      timeout: 10, // minutes
      environment: {
        buildImage: LinuxBuildImage.UBUNTU_14_04_NODEJS_8_11_0,
      },
      source: new CodePipelineSource(),
      buildSpec: props.buildSpec || 'buildspec.yml',
      role: this.buildRole,
    });
  }
}
```

Quick note on pipeline code organisation

I created all these CDK custom constructs within a shared autochart-infrastructure repo which I use to define shared, cross-cutting infrastructure resources that are used by multiple services.

The buildspec files that I cover below live in the same repository as their service (which is how it should be). I’m not totally happy with having the pipeline definition and the build scripts in separate repos and I will probably look at a way of moving my reusable CDK constructs into a shared library and have the source for the CDK apps defined in the service-specific repos while referencing this library.

Writing the CodeBuild scripts

In the above pipeline definition code, you may have noticed that each deployment stage has its own buildspec file (using the naming convention buildspec.<stage>.yml). I want to minimise the amount of logic within the pipeline itself and keep it solely responsible for orchestration. All the logic will live in the build scripts.
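Because every per-stage name is derived from the service name, these conventions can be captured in one pure function. The sketch below reproduces the naming visible in the ServicePipeline code above: the `${pipelineName}_<stage>` project names, the buildspec.<stage>.yml files, the `<service>-<stage>-deployer-role` ARNs built by CrossAccountDeploymentRole.getRoleArnForService, and where each stage finds its package. The StagePlan type, the planStages and roleArnFor functions, the "PushToMaster" trigger value and the account IDs are all hypothetical.

```typescript
type StageName = "dev" | "staging" | "prod";

interface StagePlan {
  stage: StageName;
  projectName: string;     // CodeBuild project name
  buildSpec: string;       // buildspec file in the service repo
  deployerRoleArn: string; // role assumed by build.sh before `sls deploy`
  packageLocation: string; // where the stage's package is found at deploy time
}

// Same format as CrossAccountDeploymentRole.getRoleArnForService.
function roleArnFor(serviceName: string, stage: StageName, accountId: string): string {
  return `arn:aws:iam::${accountId}:role/${serviceName}-${stage}-deployer-role`;
}

function planStages(
  serviceName: string,
  sourceTrigger: string,
  accounts: Record<StageName, string>,
): StagePlan[] {
  const pipelineName = `${serviceName}_${sourceTrigger}`;
  const stages: StageName[] = ["dev", "staging", "prod"];
  return stages.map((stage) => ({
    stage,
    projectName: `${pipelineName}_${stage}`,
    buildSpec: `buildspec.${stage}.yml`,
    deployerRoleArn: roleArnFor(serviceName, stage, accounts[stage]),
    // DEV deploys the package it has just built; later stages receive theirs
    // as a secondary input artifact named <stage>Package.
    packageLocation: stage === "dev" ? "dist/dev" : `$CODEBUILD_SRC_DIR_${stage}Package`,
  }));
}

// Hypothetical trigger value and account IDs.
const plan = planStages("ac-rest-api", "PushToMaster", {
  dev: "111111111111",
  staging: "222222222222",
  prod: "333333333333",
});
for (const p of plan) {
  console.log(p.projectName, p.buildSpec, p.deployerRoleArn, p.packageLocation);
}
```

Keeping the conventions in one place like this would also make it harder for the CDK code and the buildspec files to drift apart.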

Building and deploying to DEV stage

As soon as a push occurs to the master branch in GitHub, the next pipeline step is to run tests, package and deploy to the DEV stage. This is shown below:

```yaml
# buildspec.dev.yml
version: 0.2

env:
  variables:
    TARGET_REGION: us-east-1
    SLS_DEBUG: ''
    DEPLOYER_ROLE_ARN: 'arn:aws:iam::<dev_account_id>:role/ac-rest-api-dev-deployer-role'

phases:
  pre_build:
    commands:
      - chmod +x build.sh
      - ./build.sh install
  build:
    commands:
      # Do some local testing
      - ./build.sh test-unit
      - ./build.sh test-integration
      # Create separate packages for each target environment
      - ./build.sh clean
      - ./build.sh package dev $TARGET_REGION
      - ./build.sh package staging $TARGET_REGION
      - ./build.sh package prod $TARGET_REGION
      # Deploy to DEV and run acceptance tests there
      - ./build.sh deploy dev $TARGET_REGION dist/dev
      - ./build.sh test-acceptance dev

artifacts:
  files:
    - '**/*'
  secondary-artifacts:
    devPackage:
      base-directory: ./dist/dev
      files:
        - '**/*'
    stagingPackage:
      base-directory: ./dist/staging
      files:
        - '**/*'
    prodPackage:
      base-directory: ./dist/prod
      files:
        - '**/*'
```

There are a few things to note here:

- A DEPLOYER_ROLE_ARN environment variable is required, which identifies a pre-existing IAM role that has the permissions to perform the deployment steps in the target account. We will cover how to set this up later.

- Each build command references a build.sh file. The reason for this indirection is to make it easier to test the build scripts during development, as I've no way of invoking a buildspec file locally (this is based on the approach Yan Cui recommends in the CI/CD module of his Production-Ready Serverless course).

- I run unit and integration tests before doing the packaging. These run the Lambda function code locally (in the CodeBuild container) and not via AWS Lambda, though the integration tests may hit existing downstream AWS resources that the functions call.

- I am creating 3 deployment artifacts here, one for each stage, and including them as output artifacts that later stages can use. I wasn't totally happy with this, as it violates the DevOps best practice of having a single immutable artifact flow through every stage. My reason for doing this was that the sls package command that build.sh uses requires a specific stage to be provided to it. However, I've since learned that there is a workaround for this, so I will probably change this soon.

- I run acceptance tests (which hit the newly deployed API Gateway endpoints) as a final step.
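The names under secondary-artifacts in buildspec.dev.yml have to line up with the additionalOutputArtifactNames declared on the Build_Deploy_DEV action, and with the additionalOutputArtifact(...) lookups in the later stages; a typo in any one of them typically only surfaces when the pipeline runs. A small check like the hypothetical findArtifactMismatches below could catch this earlier, for example in a unit test of the pipeline code. It assumes the secondary artifact names have already been read out of the YAML file; parsing is left out to keep the sketch dependency-free.

```typescript
// Compares artifact names that are declared in three places and must agree.
function findArtifactMismatches(
  pipelineOutputs: string[],       // additionalOutputArtifactNames in the CDK code
  buildspecSecondary: string[],    // keys under secondary-artifacts in buildspec.dev.yml
  consumedByLaterStages: string[], // names passed to additionalOutputArtifact(...)
): string[] {
  const problems: string[] = [];
  for (const name of pipelineOutputs) {
    if (buildspecSecondary.indexOf(name) === -1) {
      problems.push(`pipeline expects '${name}' but the buildspec never produces it`);
    }
  }
  for (const name of buildspecSecondary) {
    if (pipelineOutputs.indexOf(name) === -1) {
      problems.push(`buildspec produces '${name}' but the pipeline does not declare it`);
    }
  }
  for (const name of consumedByLaterStages) {
    if (pipelineOutputs.indexOf(name) === -1) {
      problems.push(`a later stage consumes undeclared artifact '${name}'`);
    }
  }
  return problems;
}

const declared = ["devPackage", "stagingPackage", "prodPackage"];
console.log(findArtifactMismatches(declared, declared, ["stagingPackage", "prodPackage"]));
// A typo in the buildspec is reported from both sides.
console.log(findArtifactMismatches(declared, ["devPackage", "stagingPkg", "prodPackage"], ["stagingPackage", "prodPackage"]));
```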

The build.sh file is below:

```bash
#!/bin/bash
set -e
set -o pipefail

instruction()
{
  echo "usage: ./build.sh package <stage> <region>"
  echo ""
  echo "./build.sh deploy <stage> <region> <pkg_dir>"
  echo ""
  echo "./build.sh test-<test_type> <stage>"
}

assume_role() {
  if [ -n "$DEPLOYER_ROLE_ARN" ]; then
    echo "Assuming role $DEPLOYER_ROLE_ARN ..."
    CREDS=$(aws sts assume-role --role-arn "$DEPLOYER_ROLE_ARN" \
      --role-session-name my-sls-session --output json)
    echo "$CREDS" > temp_creds.json
    export AWS_ACCESS_KEY_ID=$(node -p "require('./temp_creds.json').Credentials.AccessKeyId")
    export AWS_SECRET_ACCESS_KEY=$(node -p "require('./temp_creds.json').Credentials.SecretAccessKey")
    export AWS_SESSION_TOKEN=$(node -p "require('./temp_creds.json').Credentials.SessionToken")
    aws sts get-caller-identity
  fi
}

unassume_role() {
  unset AWS_ACCESS_KEY_ID
  unset AWS_SECRET_ACCESS_KEY
  unset AWS_SESSION_TOKEN
}

if [ $# -eq 0 ]; then
  instruction
  exit 1
elif [ "$1" = "install" ] && [ $# -eq 1 ]; then
  npm install
elif [ "$1" = "test-unit" ] && [ $# -eq 1 ]; then
  npm run lint
  npm run test
elif [ "$1" = "test-integration" ] && [ $# -eq 1 ]; then
  echo "Running INTEGRATION tests..."
  npm run test-integration
  echo "INTEGRATION tests complete."
elif [ "$1" = "clean" ] && [ $# -eq 1 ]; then
  rm -rf ./dist
elif [ "$1" = "package" ] && [ $# -eq 3 ]; then
  STAGE=$2
  REGION=$3
  'node_modules/.bin/sls' package -s "$STAGE" -p "./dist/${STAGE}" -r "$REGION"
elif [ "$1" = "deploy" ] && [ $# -eq 4 ]; then
  STAGE=$2
  REGION=$3
  ARTIFACT_FOLDER=$4
  echo "Deploying from ARTIFACT_FOLDER=${ARTIFACT_FOLDER}"
  assume_role
  # 'node_modules/.bin/sls' create_domain -s $STAGE -r $REGION
  echo "Deploying service to stage $STAGE..."
  'node_modules/.bin/sls' deploy --force -s "$STAGE" -r "$REGION" -p "$ARTIFACT_FOLDER"
  unassume_role
elif [ "$1" = "test-acceptance" ] && [ $# -eq 2 ]; then
  STAGE=$2
  echo "Running ACCEPTANCE tests for stage $STAGE..."
  STAGE=$STAGE npm run test-acceptance
  echo "ACCEPTANCE tests complete."
elif [ "$1" = "clearcreds" ] && [ $# -eq 1 ]; then
  unassume_role
  rm -f ./temp_creds.json
else
  instruction
  exit 1
fi
```

The main thing to note in the above script is the assume_role function, which gets called before the deploy command. In order for CodeBuild to deploy to a different AWS account, the sls deploy command of the Serverless Framework needs to run as a role defined in the target account. To do this, the CodeBuild IAM role (which runs in the DEV account) needs to assume this role. I'm currently doing this by invoking the aws sts assume-role command of the AWS CLI to get temporary credentials for the deployment role. However, this seems messy, so if anyone knows a cleaner way of doing this, I'd love to hear it.
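The assume_role function relies on three node -p calls to pull fields out of the JSON printed by aws sts assume-role. The same extraction can be expressed as one small typed function, which is easier to unit test and fails loudly if a field is missing. This is only a sketch of the parsing step, assuming the documented Credentials shape (AccessKeyId, SecretAccessKey, SessionToken, Expiration); the function name is hypothetical and the overall approach of calling the CLI is unchanged.

```typescript
// Shape of the relevant part of `aws sts assume-role --output json`.
interface AssumeRoleOutput {
  Credentials?: {
    AccessKeyId?: string;
    SecretAccessKey?: string;
    SessionToken?: string;
    Expiration?: string;
  };
}

// Converts the CLI output into the three environment variables that the
// AWS CLI and the Serverless Framework read for temporary credentials.
function credentialsToEnv(cliJson: string): Record<string, string> {
  const parsed = JSON.parse(cliJson) as AssumeRoleOutput;
  const creds = parsed.Credentials;
  if (!creds || !creds.AccessKeyId || !creds.SecretAccessKey || !creds.SessionToken) {
    throw new Error("assume-role output is missing Credentials fields");
  }
  return {
    AWS_ACCESS_KEY_ID: creds.AccessKeyId,
    AWS_SECRET_ACCESS_KEY: creds.SecretAccessKey,
    AWS_SESSION_TOKEN: creds.SessionToken,
  };
}

// Fake values in the documented shape, for illustration only.
const sample = JSON.stringify({
  Credentials: {
    AccessKeyId: "ASIAEXAMPLE",
    SecretAccessKey: "secret-example",
    SessionToken: "token-example",
    Expiration: "2019-01-01T00:00:00Z",
  },
});

const env = credentialsToEnv(sample);
// Print `export` lines that a shell could eval.
for (const key of Object.keys(env)) {
  console.log(`export ${key}='${env[key]}'`);
}
```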

Deploying to Staging and Production

The script for deploying and running tests against staging is similar to the dev script, but simpler:

```yaml
# buildspec.staging.yml
version: 0.2

env:
  variables:
    TARGET_REGION: us-east-1
    SLS_DEBUG: '*'
    DEPLOYER_ROLE_ARN: 'arn:aws:iam::<staging_account_id>:role/ac-rest-api-staging-deployer-role'

# Deploy to STAGING stage and run acceptance tests.
phases:
  pre_build:
    commands:
      - chmod +x build.sh
      - ./build.sh install
  build:
    commands:
      - ./build.sh deploy staging $TARGET_REGION $CODEBUILD_SRC_DIR_stagingPackage
      - ./build.sh test-acceptance staging
```

The key things to note here are:

- I use the $CODEBUILD_SRC_DIR_stagingPackage environment variable to access the directory where the stagingPackage output artifact from the Build_Deploy_DEV stage is located.

The buildspec.prod.yml file is pretty much the same as the staging one, except it references the production IAM role and artefact source directory.
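Since the staging and prod buildspecs differ only in the stage name, the target account and the package directory, they could in principle be generated from a single template. The sketch below is a hypothetical generator (renderDeployBuildspec is not part of the setup described here) that reproduces the structure of buildspec.staging.yml; the account ID in the usage line is a placeholder.

```typescript
// Hypothetical generator for the deploy-only buildspecs (staging and prod).
function renderDeployBuildspec(
  serviceName: string,
  stage: "staging" | "prod",
  accountId: string,
  region = "us-east-1",
): string {
  const roleArn = `arn:aws:iam::${accountId}:role/${serviceName}-${stage}-deployer-role`;
  // CodeBuild exposes each secondary input artifact as CODEBUILD_SRC_DIR_<name>.
  const packageDir = `$CODEBUILD_SRC_DIR_${stage}Package`;
  return [
    `# buildspec.${stage}.yml`,
    "version: 0.2",
    "",
    "env:",
    "  variables:",
    `    TARGET_REGION: ${region}`,
    "    SLS_DEBUG: '*'",
    `    DEPLOYER_ROLE_ARN: '${roleArn}'`,
    "",
    `# Deploy to ${stage.toUpperCase()} stage and run acceptance tests.`,
    "phases:",
    "  pre_build:",
    "    commands:",
    "      - chmod +x build.sh",
    "      - ./build.sh install",
    "  build:",
    "    commands:",
    `      - ./build.sh deploy ${stage} $TARGET_REGION ${packageDir}`,
    `      - ./build.sh test-acceptance ${stage}`,
    "",
  ].join("\n");
}

console.log(renderDeployBuildspec("ac-rest-api", "prod", "333333333333"));
```

Whether this is worth it depends on how many services end up sharing the same deploy steps; for a single service, two near-identical files are arguably simpler.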

Deployment Permissions and Cross-account access

This was probably the hardest part of the whole process. As I mentioned above, the deployment script assumes a pre-existing deployer-role IAM role that exists in the AWS account of the stage being deployed to.

To set up these roles, I again used the CDK to define a custom construct CrossAccountDeploymentRole as follows:

```typescript
export interface CrossAccountDeploymentRoleProps {
  serviceName: string;
  /** account ID where CodePipeline/CodeBuild is hosted */
  deployingAccountId: string;
  /** stage for which this role is being created */
  targetStageName: string;
  /** Permissions that deployer needs to assume to deploy stack */
  deployPermissions: PolicyStatement[];
}

/**
 * Creates an IAM role to allow for cross-account deployment of a service's resources.
 */
export class CrossAccountDeploymentRole extends Construct {
  public static getRoleNameForService(serviceName: string, stage: string): string {
    return `${serviceName}-${stage}-deployer-role`;
  }

  public static getRoleArnForService(serviceName: string, stage: string, accountId: string): string {
    return `arn:aws:iam::${accountId}:role/${CrossAccountDeploymentRole.getRoleNameForService(serviceName, stage)}`;
  }

  readonly deployerRole: Role;
  readonly deployerPolicy: Policy;
  readonly roleName: string;

  public constructor(parent: Construct, id: string, props: CrossAccountDeploymentRoleProps) {
    super(parent, id);
    this.roleName = CrossAccountDeploymentRole.getRoleNameForService(props.serviceName, props.targetStageName);

    // Cross-account assume role
    // https://awslabs.github.io/aws-cdk/refs/_aws-cdk_aws-iam.html#configuring-an-externalid
    this.deployerRole = new Role(this, 'deployerRole', {
      roleName: this.roleName,
      assumedBy: new AccountPrincipal(props.deployingAccountId),
    });

    const passrole = new PolicyStatement(PolicyStatementEffect.Allow)
      .addActions(
        'iam:PassRole',
      ).addAllResources();

    this.deployerPolicy = new Policy(this, 'deployerPolicy', {
      policyName: `${this.roleName}-policy`,
      statements: [passrole, ...props.deployPermissions],
    });
    this.deployerPolicy.attachToRole(this.deployerRole);
  }
}
```

The props object that is passed to the constructor contains the specific requirements of the service. The main thing here is deployPermissions, a set of IAM policy statements that enable the role running the Serverless Framework's sls deploy command to deploy all the necessary resources (CloudFormation stacks, Lambda functions, etc.).
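Cross-account role assumption needs permission on both sides. In the target account, the deployer role's trust policy must name the deploying account; the AccountPrincipal used above corresponds to a principal of the form arn:aws:iam::<account-id>:root, which delegates the final decision to IAM policies in the DEV account. In the DEV account, the CodeBuild role in turn needs an identity policy allowing sts:AssumeRole on the deployer role's ARN (presumably what ServiceDeployerRole sets up from its deployerRoleArn prop, though its code isn't shown here). The sketch below builds both JSON policy documents as plain objects; the helper names and account IDs are hypothetical.

```typescript
interface PolicyStatementJson {
  Effect: "Allow" | "Deny";
  Action: string | string[];
  Principal?: { AWS: string };
  Resource?: string | string[];
}

interface PolicyDocumentJson {
  Version: "2012-10-17";
  Statement: PolicyStatementJson[];
}

// Trust policy on <service>-<stage>-deployer-role in the TARGET account.
function deployerTrustPolicy(deployingAccountId: string): PolicyDocumentJson {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Principal: { AWS: `arn:aws:iam::${deployingAccountId}:root` },
        Action: "sts:AssumeRole",
      },
    ],
  };
}

// Identity policy for the CodeBuild role in the DEPLOYING (DEV) account.
function codeBuildAssumePolicy(deployerRoleArns: string[]): PolicyDocumentJson {
  return {
    Version: "2012-10-17",
    Statement: [{ Effect: "Allow", Action: "sts:AssumeRole", Resource: deployerRoleArns }],
  };
}

const prodRoleArn = "arn:aws:iam::333333333333:role/ac-rest-api-prod-deployer-role";
console.log(JSON.stringify(deployerTrustPolicy("111111111111"), null, 2));
console.log(JSON.stringify(codeBuildAssumePolicy([prodRoleArn]), null, 2));
```

If either half is missing, the aws sts assume-role call in build.sh fails with an access-denied error, which is a useful first thing to check when a cross-account deploy breaks.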

An important thing to note is that the deployPermissions set is different from the runtime permissions your serverless service needs to execute. Working out what deploy-time IAM permissions a service needs is a well-known problem in the serverless space and one that I haven't yet found a nice solution for. I initially started by giving my role admin access until I got the end-to-end pipeline working, and then worked backwards to add more granular permissions, but this involved a lot of trial and error.

For more information on how IAM works with the Serverless Framework, I'd recommend reading The ABCs of IAM: Managing permissions with Serverless.
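One way to tighten an admin-level starting point is to scope resources by the names the Serverless Framework generates. By default it names the CloudFormation stack <service>-<stage> and each Lambda function <service>-<stage>-<function>, and Lambda writes logs to /aws/lambda/<function-name>, so statements can be limited to ARNs with those prefixes. The sketch below builds such statements as plain JSON objects; scopedDeployStatements is a hypothetical helper, and the wildcard action lists are placeholders to narrow further, not a verified minimal set for sls deploy.

```typescript
interface ScopedStatement {
  Effect: "Allow";
  Action: string[];
  Resource: string[];
}

// Builds statements whose resources are limited to names derived from the
// Serverless Framework's default naming (<service>-<stage>...).
function scopedDeployStatements(
  service: string,
  stage: string,
  region: string,
  accountId: string,
): ScopedStatement[] {
  const prefix = `${service}-${stage}`;
  return [
    {
      Effect: "Allow",
      Action: ["cloudformation:*"], // placeholder: narrow once the needed calls are known
      Resource: [`arn:aws:cloudformation:${region}:${accountId}:stack/${prefix}/*`],
    },
    {
      Effect: "Allow",
      Action: ["lambda:*"], // placeholder
      Resource: [`arn:aws:lambda:${region}:${accountId}:function:${prefix}-*`],
    },
    {
      Effect: "Allow",
      Action: ["logs:*"], // placeholder
      Resource: [`arn:aws:logs:${region}:${accountId}:log-group:/aws/lambda/${prefix}-*`],
    },
  ];
}

console.log(JSON.stringify(scopedDeployStatements("ac-rest-api", "prod", "us-east-1", "333333333333"), null, 2));
```

Some calls made during a deploy do not support resource-level scoping at all (cloudformation:ValidateTemplate is one example), so they would still need a separate statement with Resource "*"; finding the full list remains the trial-and-error exercise described above.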

A few tips for building and testing your own pipelines

- Always run the build.sh file locally first to make sure it works on your machine

- Use the “Release Change” button in the CodePipeline console to start a new pipeline execution without resorting to pushing dummy commits to GitHub.

Future Enhancements

Going forward, here are a few additions I’d like to make to my CICD process:

- Add a manual approval step before deploying to PROD.

- Add an automatic rollback action if acceptance tests against the PROD deployment fail.

- Creations/updates of Pull Requests should trigger a shorter test-only pipeline. This will help to identify integration issues earlier.

- Slack integration for build notifications.

- Update my package step so that it only builds a single immutable package that will be deployed to all environments.

- Get extra meta, and have a pipeline for the CICD code 🤯. Changes to my pipeline will have tests run against them before deploying a new pipeline.

Next Steps

Next up in my migration plan is getting an auth mechanism built into API Gateway that new routes can use. That will be the first real production code being tested by my new CICD pipeline.
