Optimising for the right thing at the right time

In traditional server-based systems, the architecture of an application that has hit the big-time in terms of users will probably look very different from how it started out — servers scaled up and out, cache servers added, sharding introduced, maybe even an entirely new database swapped in to cope with the new load requirements.

With serverless architectures on the other hand, the built-in autoscaling means that it’s much easier to design your system with scale in mind right from the start and things shouldn’t need to change too much as you grow. And because it “scales to zero”, you’re not paying for this extra elasticity in the early days.

This is undoubtedly a very good thing.

But there is a subtle negative side effect of this that I’ve started to notice (both in myself and others) when discussing the design of a new serverless application.

We now have this powerful suite of autoscalable services at our disposal, and this is pushing scaling-related optimisations to the very forefront of our minds. But this can sometimes be to the detriment of other important factors that also affect the overall Total Cost of Ownership of a system, such as the ability of engineers to reason about an architectural component or piece of code.

DynamoDB single table data modelling is a prime example of this. It’s a hugely powerful technique (that I use myself in many projects) but it does undoubtedly add a layer of complexity to your system.

The measurability of the serverless pricing model can exacerbate this tendency. It’s much easier to estimate the potential billing savings of reducing the round-trips to your DynamoDB table than it is to gauge the fuzzier impact of this optimisation on engineers working with the database on an ongoing basis.

As the architect designing a solution, no-one on the team will understand it as well as we do. We therefore have a subconscious bias to under-estimate the “understandability” factor. We think that if we understand it well, then others will be able to also.

But often we don’t think far enough in terms of who this could impact. Take the example of optimising your DynamoDB table to use shorter attribute names. Back-end engineers will be implementing this, but front-end devs or QA engineers may need to get direct access to the database in dev or test environments in order to find, add or edit test data. Will they be able to navigate the data ok to locate what they’re looking for and apply the necessary updates?

So, when faced with an optimisation option that seems to pull at different ends of the scalability–understandability rope, how do you make a decision on whether to proceed with it?

Here are a few questions that you can ask yourself to help:

- What is the effort involved in implementing this optimisation now?

- Do the math and calculate the expected improvements that this would deliver (in terms of throughput, response time, billing cost savings, etc).

- When do you realistically see the usage levels you’ve estimated for being hit in production?

- What team members will this affect in terms of understandability? Back-end devs, front-end devs, QAs, ops engineers?

- Are there any methods you could use to make a good-enough guess at the negative impacts of this optimisation?

- What would be the cost of introducing this optimisation at a later stage (when a certain level of scale has been reached)? Would it involve a total architectural restructuring or just a few small code changes/scripts run?

If, after all this, I’m still not sure or the billing cost savings or increased performance benefits aren’t pronounced, I will always default to the side of understandability.

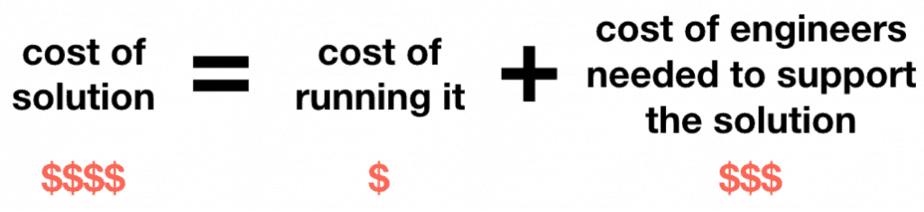

I come from a background of application development rather than operations and scaling, so maybe that’s my experience bias showing through. But whenever serverless costs come up, I almost always keep coming back to this equation:

Be very wary of giving your engineers more work to do in the long term in the name of “cost savings”.

— Paul

Other articles you might enjoy:

Free Email Course

How to transition your team to a serverless-first mindset

In this 5-day email course, you’ll learn:

- Lesson 1: Why serverless is inevitable

- Lesson 2: How to identify a candidate project for your first serverless application

- Lesson 3: How to compose the building blocks that AWS provides

- Lesson 4: Common mistakes to avoid when building your first serverless application

- Lesson 5: How to break ground on your first serverless project