Production-fidelity developer testing

When thinking about testing serverless architectures, it’s tempting to focus on the hard parts: async workflows, the limits of what can be run locally, etc.

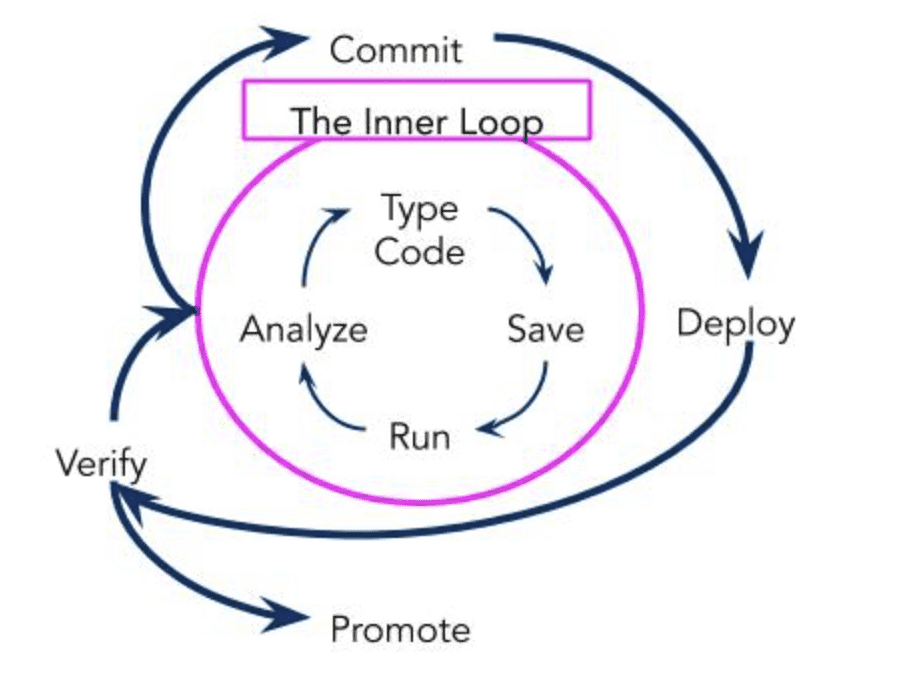

But a rarely mentioned benefit of serverless is that it enables us to shift left on testing. The discovery of many categories of issues can be brought forward into earlier stages of the development lifecycle, even to within the Inner Loop of an individual developer’s environment:

The overarching goal for any form of pre-production testing is to provide confidence that the system will behave as expected in production when a new version of it is released. And what better way to do this than to run our pre-production tests in an environment as close as possible in fidelity to production?

With traditional server-based architectures, provisioning truly production-like environments was expensive, both in infrastructure cost and in engineering time. This led to compromises that often resulted in significant differences between pre-production testing environments and production itself (e.g. databases running on single nodes instead of clusters, and web servers not using SSL certificates).

What’s more, automating the provisioning and configuration of the infrastructure usually took much more effort for server-based architectures. The end result was that an automated test suite that passed in a pre-production testing environment, while still valuable, left the door open for many defects to slip through because of the large delta between environments.

With serverless applications, on the other hand, we have the opportunity to create production-like environments much earlier in the lifecycle of a change. Infrastructure-as-code frameworks can define the entire system within the developer’s Git repo. Cloud resources can be provisioned in a few minutes or less (usually with the same configuration they have in production), and the pay-per-use pricing model means that running them at test-level traffic costs nothing or next to nothing.
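To make the idea of a per-developer environment more concrete, here is a minimal TypeScript sketch. It assumes a convention that is not from the original post: each developer deploys their own full copy of the stack under a "stage" name derived from their username, and every physical resource name is prefixed with the service and stage. The helpers `toStageName`, `resourceName` and `ordersTable`, the `shop` service and the `orders` table are all hypothetical; in a real project, names like these would be fed into whichever IaC framework you use.

```typescript
// Hypothetical helper: derive an isolated "stage" name for a developer so they
// can deploy their own copy of the stack from the same IaC definitions.
function toStageName(developerId: string): string {
  // Lowercase and collapse any run of non-alphanumeric characters into "-",
  // since many AWS resource names only allow a restricted character set.
  const slug = developerId
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, "");
  if (slug.length === 0) {
    throw new Error(`Cannot derive a stage name from "${developerId}"`);
  }
  return `dev-${slug}`;
}

// Hypothetical helper: prefix physical names with service + stage so a
// developer's stack never collides with production or a colleague's stack.
function resourceName(service: string, stage: string, logicalName: string): string {
  return `${service}-${stage}-${logicalName}`;
}

// Simplified, hypothetical table definition shared by every stage.
interface TableConfig {
  name: string;
  partitionKey: string;
  billingMode: "PAY_PER_REQUEST" | "PROVISIONED";
}

// The same definition is used for every stage: only the name varies.
function ordersTable(service: string, stage: string): TableConfig {
  return {
    name: resourceName(service, stage, "orders"),
    partitionKey: "pk",
    // On-demand billing: pay per request rather than for provisioned capacity.
    billingMode: "PAY_PER_REQUEST",
  };
}

const devStage = toStageName("Jane.Doe"); // "dev-jane-doe"
console.log(ordersTable("shop", devStage));
console.log(ordersTable("shop", "prod"));
```

Because only the name changes per stage, the developer’s table gets the same key schema and billing mode as production, which is what keeps the delta between the two environments small.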

Let’s look at what can constitute the delta between two environments for a given AWS serverless application:

- Runtime application code: the code itself will be the same, but it may be packaged differently, e.g. by bundlers that do transpilation or tree-shaking

- Runtime code environment, e.g. local workstation vs build container (CodeBuild/GitHub Actions) vs Lambda

- Infrastructure resource implementations, e.g. real DynamoDB vs DynamoDB Local vs fake/stub

- Infrastructure resource configuration, e.g. your DynamoDB GSI definition, IAM roles/policies

- AWS account-level configuration, e.g. service control policies, permissions boundaries, soft limits

- Pre-existing data (in databases, user pools, in-flight queue messages, etc.)

- Temporal traffic patterns
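One way to make this list actionable is to describe each environment as a record with one value per dimension and diff it against production. The sketch below is hypothetical: the dimension keys, the `environmentDelta` function and the sample values (such as `nodejs20.x` or "seed data") are illustrative assumptions, not an existing tool. It shows a developer stack deployed to real AWS from the same IaC config differing from production mainly in account configuration, data and traffic, while a purely local setup adds runtime and resource-implementation differences on top.

```typescript
// Hypothetical model of the delta dimensions listed above.
type Dimension =
  | "codePackaging"
  | "runtimeEnvironment"
  | "resourceImplementation"
  | "resourceConfiguration"
  | "accountConfiguration"
  | "preExistingData"
  | "trafficPatterns";

// Each environment is described by one (simplified) value per dimension.
type EnvironmentProfile = Record<Dimension, string>;

// Returns the dimensions on which two environments differ, i.e. the places where
// a test that passes in `candidate` could still fail in `production`.
function environmentDelta(candidate: EnvironmentProfile, production: EnvironmentProfile): Dimension[] {
  return (Object.keys(production) as Dimension[]).filter((d) => candidate[d] !== production[d]);
}

const production: EnvironmentProfile = {
  codePackaging: "bundled",
  runtimeEnvironment: "Lambda nodejs20.x",
  resourceImplementation: "DynamoDB",
  resourceConfiguration: "iac:main",
  accountConfiguration: "prod account",
  preExistingData: "live data",
  trafficPatterns: "real users",
};

// A developer stack deployed to a real AWS account from the same IaC config.
const devStack: EnvironmentProfile = {
  ...production,
  accountConfiguration: "dev account",
  preExistingData: "seed data",
  trafficPatterns: "test runs",
};

// A purely local setup using an emulator instead of the real service.
const localOnly: EnvironmentProfile = {
  ...devStack,
  runtimeEnvironment: "local Node.js",
  resourceImplementation: "DynamoDB Local",
};

console.log(environmentDelta(devStack, production));
console.log(environmentDelta(localOnly, production));
```

Printing both deltas makes it explicit which dimensions each kind of test environment can and cannot vouch for.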

Looking through this list, there are still a few areas (traffic and data) where it’s not feasible for pre-production environments to fully mirror production, but we can get much closer on the rest. And as cloud services and tools improve (faster deployment times, etc.), this gap will close further.

If you’re trying to make the case in your organisation for investing in better testing of serverless applications, the benefits of early-stage production-fidelity testing are a good place to start.

—Paul

Paul Swail

Indie Cloud Consultant helping small teams learn and build with serverless.

I publish short emails like this on building software with serverless on a daily-ish basis. They’re casual, easy to digest, and sometimes thought-provoking. If daily is too much, you can also join my less frequent newsletter to get updates on new longer-form articles.