Scaling Lambdas inside a VPC

So you’re building a Lambda function and you need it to talk to resources inside your VPC, such as an RDS database or an ElastiCache Redis cluster.

You’ve heard VPC Lambda functions are more complicated than standard functions and are finding it difficult to get the full picture from the AWS documentation. It’s hard to know what you don’t know when the relevant docs are spread over so many pages.

However, you do know the questions you need answers to in order to be confident that your function will perform at scale:

- Will it be able to handle my maximum projected throughput?

- Is there a ramp-up limit?

- How will my app be affected if the actual usage surpasses these limits?

- How can cold starts affect my Lambda function while under load?

In this article, I’ll show you how to answer all of these questions and help you to ensure that your VPC-enabled function is optimally configured for scalability.

Don’t care about understanding the “why” and just want to know the “how”? Jump straight to the action plan.👇

Overview of Lambda scaling

Let’s first look at the scaling behaviour of Lambda functions:

AWS Lambda will dynamically scale capacity in response to increased traffic, subject to your account’s Account Level Concurrent Execution Limit. To handle any burst in traffic, Lambda will immediately increase your concurrently executing functions by a predetermined amount, dependent on which region it’s executed.

To elaborate on what is meant by “scale capacity” here, under the hood each Lambda function is run inside a container (think Docker). A single container can handle sequential requests for several hours before Lambda eventually disposes of it. However, if another request comes in while one is in progress, a new container needs to be spun up to handle it.

The Account Level Concurrent Execution Limit is set to 1,000 per region (although it can be increased by contacting AWS Support). This means that the upper limit of throughput for all Lambda functions in your account can be calculated as follows:

max_requests_per_second = 1000 / function_duration_seconds

So for a function which takes an average of 0.5 seconds to complete, the absolute maximum throughput that you can achieve is 1000 / 0.5 = 2000 requests per second. (If other functions in your account are also executing during this period, this limit will be lower.)
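As a quick sanity check, the throughput formula can be wrapped in a small Python helper. This is a minimal sketch: the function name and the `concurrency_used_elsewhere` parameter (a rough way to account for other functions sharing the account limit) are illustrative and not part of any AWS API.

```python
def max_requests_per_second(
    concurrency_limit: int,
    avg_duration_s: float,
    concurrency_used_elsewhere: int = 0,
) -> float:
    """Upper bound on throughput: each concurrently running container can
    serve 1 / avg_duration_s requests per second."""
    if avg_duration_s <= 0:
        raise ValueError("average duration must be positive")
    # Concurrency left for this function after other functions take their share.
    available = max(concurrency_limit - concurrency_used_elsewhere, 0)
    return available / avg_duration_s


# The worked example from the text: default limit of 1,000 and 0.5 s per request.
print(max_requests_per_second(1000, 0.5))       # 2000.0
# Same function, but 200 concurrent executions are used by other functions.
print(max_requests_per_second(1000, 0.5, 200))  # 1600.0
```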

Adding a VPC into the mix

When you connect your Lambda function to a VPC, scalability gets more complicated:

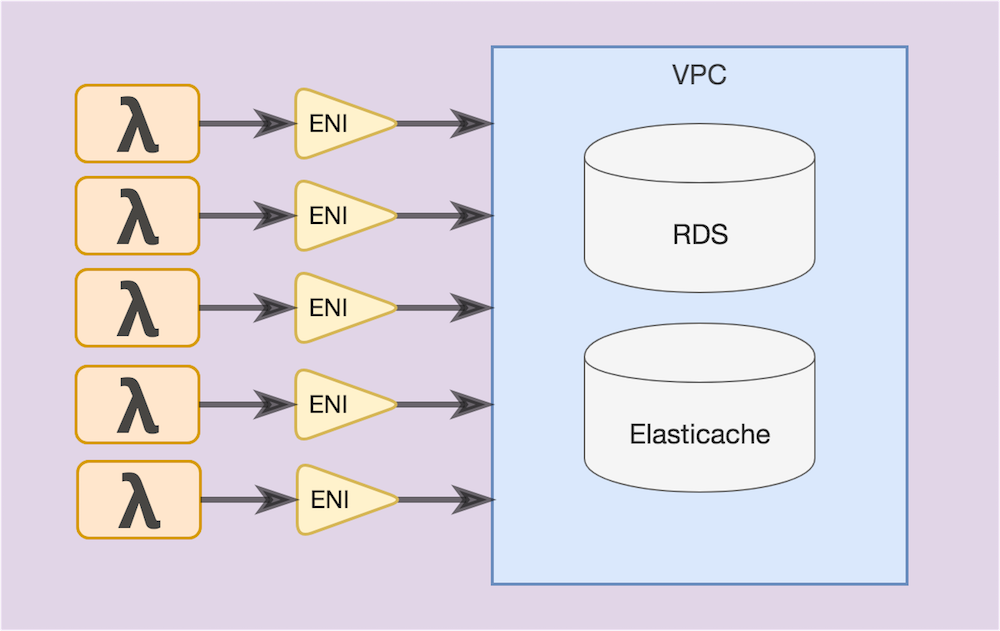

AWS Lambda runs your function code securely within a VPC by default. However, to enable your Lambda function to access resources inside your private VPC, you must provide additional VPC-specific configuration information that includes VPC subnet IDs and security group IDs. AWS Lambda uses this information to set up elastic network interfaces (ENIs) that enable your function to connect securely to other resources within your private VPC.

This requirement to set up an ENI between each function container and the VPC constrains the scalability of your Lambda function in 3 ways:

- There’s an upper limit on the number of ENIs that can be created (ENI capacity), thus capping the max achievable requests per second

- Cold start overhead is greater

- Ramp-up rate is capped

Constraint #1: ENI Capacity

The docs advise:

If your Lambda function accesses a VPC, you must make sure that your VPC has sufficient ENI capacity to support the scale requirements of your Lambda function.

… and they provide the following formula to tell you how many ENIs your function will need:

ENI Capacity = Projected peak concurrent executions * (Memory in GB / 3GB)

So if your projected peak load is 1,000,000 requests/hr, your average function duration is 0.5 seconds and your function has 1GB of memory assigned to it, then the ENI capacity can be calculated as follows:

Peak concurrent executions = 1000000/(60*60) * 0.5 = 139

ENI Capacity = 139 * (1GB / 3GB) = 47
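Here is the same arithmetic as a short Python sketch. The helper names are hypothetical; the only assumption beyond the formulas above is rounding up with `math.ceil`, since you can't provision a fraction of a container or an ENI (which is how the worked example arrives at 139 and 47).

```python
import math


def peak_concurrent_executions(peak_requests_per_hour: float, avg_duration_s: float) -> int:
    # Requests per second multiplied by how long each one occupies a container.
    return math.ceil(peak_requests_per_hour / 3600 * avg_duration_s)


def required_eni_capacity(peak_concurrency: int, memory_gb: float) -> int:
    # Formula from the docs: peak concurrency * (memory in GB / 3GB), rounded up.
    return math.ceil(peak_concurrency * memory_gb / 3)


peak = peak_concurrent_executions(1_000_000, 0.5)
print(peak)                            # 139
print(required_eni_capacity(peak, 1))  # 47
```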

Calculating your actual ENI Capacity {#actual-eni-capacity}

But this formula doesn’t tell you how much ENI capacity you currently have available within your VPC, only how much your function needs to reach your projected peak load. Each ENI is assigned a private IP address from the IP address range within the subnets that are connected to the function. Therefore, to calculate your actual ENI capacity, you need the total number of available IP addresses across all of those subnets.

To do this, you can use the VPC Console to list all the subnets in your VPC. Then take the value in the IPv4 CIDR column and plug it into an IP CIDR calculator to get the number of available IP addresses in that range. Do this for every subnet connected to your function and sum the values to get your total Actual ENI Capacity.
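If you’d rather script this than use a calculator, Python’s standard `ipaddress` module can do the counting. A minimal sketch, assuming the subnet CIDRs in the example are placeholders for your own: it subtracts the five addresses AWS reserves in every subnet (the first four and the last, which a generic CIDR calculator won’t account for), but the result is still an upper bound, because addresses already used by instances, databases or other ENIs aren’t free. The available IPv4 address count the VPC Console shows per subnet reflects current usage.

```python
import ipaddress

# AWS reserves 5 addresses in every subnet (the first four and the last one).
AWS_RESERVED_PER_SUBNET = 5


def usable_ips(cidr: str) -> int:
    """Addresses in a subnet's CIDR range, minus the AWS-reserved ones."""
    network = ipaddress.ip_network(cidr)
    return max(network.num_addresses - AWS_RESERVED_PER_SUBNET, 0)


def actual_eni_capacity(subnet_cidrs: list[str]) -> int:
    """Upper bound on ENIs: sum of usable addresses across the function's subnets."""
    return sum(usable_ips(cidr) for cidr in subnet_cidrs)


# Hypothetical subnets attached to the function: two /24 ranges.
print(actual_eni_capacity(["10.0.1.0/24", "10.0.2.0/24"]))  # 502
```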

Constraint #2: Cold start overhead

All Lambda functions incur a “cold start”, which is the time taken for AWS to provision a container to service a function request. However, this impact is greater for VPC-enabled Lambda functions:

When a Lambda function is configured to run within a VPC, it incurs an additional ENI start-up penalty. This means address resolution may be delayed when trying to connect to network resources.

In addition to affecting the individual requests which incur the cold start, it will also affect your ramp-up rate as it effectively adds to your average function duration during a ramp-up period.
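To see why this matters during a ramp-up, here is a rough model: if some share of requests pay an extra start-up penalty, the average duration rises, and so does the concurrency needed to serve the same request rate. This is a sketch under stated assumptions; the function names are hypothetical, and the 50% cold-start share and 2-second overhead in the example are made-up illustrative numbers, not AWS figures.

```python
def effective_duration_s(
    warm_duration_s: float,
    cold_start_fraction: float,
    cold_start_overhead_s: float,
) -> float:
    """Average duration when a given share of requests also pays a cold-start penalty."""
    return warm_duration_s + cold_start_fraction * cold_start_overhead_s


def concurrency_needed(requests_per_second: float, duration_s: float) -> float:
    # Same relationship as the peak concurrent executions formula.
    return requests_per_second * duration_s


# Illustrative numbers only: 200 req/s, 0.5 s warm duration.
print(concurrency_needed(200, 0.5))                                # 100.0
# During ramp-up, assume half the requests pay a 2 s cold start.
print(concurrency_needed(200, effective_duration_s(0.5, 0.5, 2.0)))  # 300.0
```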

Constraint #3: Ramp-up rate

Ramp-up rate is the rate at which Lambda can provision new function containers to service an increase in requests to your function. The docs describe the ramp-up behaviour as follows:

To handle any burst in traffic, Lambda will immediately increase your concurrently executing functions by a predetermined amount, dependent on which region it’s executed. If the default Immediate Concurrency Increase value is not sufficient to accommodate the traffic surge, AWS Lambda will continue to increase the number of concurrent function executions by 500 per minute until your account safety limit has been reached or the number of concurrently executing functions is sufficient to successfully process the increased load.

The “Immediate Concurrency Increase” value is different for each region. For US East (N. Virginia), it is 3,000.
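The ramp-up behaviour described in the docs can be expressed as a small function that estimates how many whole minutes it takes to reach a target concurrency. A sketch, assuming the figures quoted above (3,000 immediate increase, +500 per minute, 1,000 account limit) as defaults; these vary by region and have changed over time, so check the current docs. The function name is hypothetical, and it ignores the EC2 rate limits that additionally apply to VPC-enabled functions.

```python
import math
from typing import Optional


def minutes_to_reach(
    target_concurrency: int,
    immediate_increase: int = 3000,
    per_minute_increase: int = 500,
    account_limit: int = 1000,
) -> Optional[int]:
    """Whole minutes after a burst starts until Lambda can run `target_concurrency`
    executions, or None if the account limit makes it unreachable."""
    if target_concurrency > account_limit:
        return None  # needs a limit increase from AWS Support first
    if target_concurrency <= immediate_increase:
        return 0  # covered by the immediate burst
    shortfall = target_concurrency - immediate_increase
    return math.ceil(shortfall / per_minute_increase)


print(minutes_to_reach(139))                          # 0
print(minutes_to_reach(2000))                         # None
print(minutes_to_reach(5000, account_limit=10_000))   # 4
```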

However, for VPC-enabled functions:

Because Lambda depends on Amazon EC2 to provide Elastic Network Interfaces for VPC-enabled Lambda functions, these functions are also subject to Amazon EC2’s rate limits as they scale.

You will need to use the EC2 Console to check the EC2 limits of your account, in particular the “Network interfaces per region” limit, which defaults to 350. If this value is less than the Required ENI Capacity figure for your function, then you will need to request an increase to this limit.

What happens when the concurrency limit is hit?

If your function reaches its concurrency limit, any further invocations to it are throttled. The throttling behaviour that Lambda applies is different depending on whether the event source is stream-based or not and whether it was invoked synchronously or asynchronously. For synchronously invoked, non-stream based event sources, the behaviour is:

Lambda returns a 429 error and the invoking service is responsible for retries. The ThrottledReason error code explains whether you ran into a function level throttle (if specified) or an account level throttle. Each service may have its own retry policy. Each throttled invocation increases the Amazon CloudWatch Throttles metric for the function.

So if you have a Lambda function behind an API Gateway, your web API clients will receive a 429 error.
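If you control the client, you can absorb throttling with retries. Below is a minimal sketch of retrying on HTTP 429 with exponential backoff and full jitter. `send` is a hypothetical stand-in for your real HTTP call and is assumed to return a `(status_code, body)` tuple; the delay values are arbitrary starting points, not recommendations from AWS.

```python
import random
import time
from typing import Callable, Tuple

Response = Tuple[int, str]


def call_with_retries(
    send: Callable[[], Response],
    max_attempts: int = 5,
    base_delay_s: float = 0.1,
    max_delay_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Response:
    """Call `send`, retrying while it returns HTTP 429, up to `max_attempts` calls."""
    status, body = send()
    for attempt in range(1, max_attempts):
        if status != 429:
            break
        # Exponential backoff with full jitter: wait a random time up to a growing cap.
        cap = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
        sleep(random.uniform(0, cap))
        status, body = send()
    return status, body


# Simulated endpoint: throttled twice, then succeeds. Sleeping is skipped here.
responses = iter([(429, "throttled"), (429, "throttled"), (200, "ok")])
print(call_with_retries(lambda: next(responses), sleep=lambda s: None))  # (200, 'ok')
```

Injecting `sleep` keeps the helper easy to test; in production you would leave the default `time.sleep`.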

Another item to be aware of is:

If your VPC does not have sufficient ENIs or subnet IPs, your Lambda function will not scale as requests increase, and you will see an increase in function failures. AWS Lambda currently does not log errors to CloudWatch Logs that are caused by insufficient ENIs or IP addresses.

Without a log entry describing why these failures happen, they would be a pain to diagnose. The only indicator you will have is an increase in the Invocation Errors CloudWatch metric.

Your action plan {#action-plan}

Now that you know how Lambda scales your VPC-enabled function, here’s a step-by-step guide to ensuring your function is optimally configured for scalability:

- Calculate your Peak Concurrent Executions with this formula:

  Peak Concurrent Executions = Peak Requests per Second * Average Function Duration (in seconds)

- Now calculate your Required ENI Capacity:

  Required ENI Capacity = Projected Peak Concurrent Executions * (Function Memory Allocation in GB / 3GB)

- If Peak Concurrent Executions > Account Level Concurrent Execution Limit (default = 1,000), then you will need to ask AWS to increase this limit.

- Configure your function to use all the subnets available inside the VPC that have access to the resource that your function needs to connect to. This both maximises Actual ENI Capacity and provides higher availability (assuming subnets are spread across 2+ availability zones).

- Calculate your Actual ENI Capacity using [these steps](#actual-eni-capacity).

- If Required ENI Capacity > Actual ENI Capacity, then you will need to do one or more of the following:
  - Decrease your function’s memory allocation to decrease your Required ENI Capacity.
  - Refactor any time-consuming code which doesn’t require VPC access into a separate Lambda function.
  - Implement throttle-handling logic in your app (e.g. by building retries into the client).

- If Required ENI Capacity > your EC2 Network interfaces per region account limit, then you will need to request that AWS increase this limit.

- Consider configuring a function-level concurrency limit to ensure your function doesn’t hit the ENI Capacity limit, and also if you wish to force throttling at a certain limit due to downstream architectural limitations.

- Monitor the concurrency levels of your functions in production using CloudWatch metrics so you know if invocations are being throttled or erroring out due to insufficient ENI capacity.

- If your Lambda function communicates with a connection-based backend service such as RDS, ensure that the maximum number of connections configured for your database is at least your Peak Concurrent Executions, otherwise your functions will fail with connection errors. See here for more info on managing RDS connections from Lambda. (kudos to Joe Keilty for mentioning this in the comments)
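The numeric checks in this action plan can be bundled into one script. This is a hedged sketch: `Plan` and `check` are hypothetical names, the defaults are the limits quoted in this article (1,000 concurrent executions, 350 network interfaces), `actual_eni_capacity` is assumed to come from the subnet calculation described earlier, and the database check assumes one connection per concurrent execution.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Plan:
    peak_requests_per_second: float
    avg_duration_s: float
    memory_gb: float
    actual_eni_capacity: int               # usable IPs across the function's subnets
    account_concurrency_limit: int = 1000  # Account Level Concurrent Execution Limit
    ec2_eni_limit: int = 350               # EC2 "Network interfaces per region"
    db_max_connections: Optional[int] = None


def check(plan: Plan) -> list[str]:
    """Return the action-plan items this configuration triggers (empty means OK)."""
    peak = math.ceil(plan.peak_requests_per_second * plan.avg_duration_s)
    required_enis = math.ceil(peak * plan.memory_gb / 3)
    findings = []
    if peak > plan.account_concurrency_limit:
        findings.append("ask AWS to raise the account concurrency limit")
    if required_enis > plan.actual_eni_capacity:
        findings.append("add subnets, lower memory, or split out non-VPC work")
    if required_enis > plan.ec2_eni_limit:
        findings.append("ask AWS to raise the EC2 network interfaces limit")
    if plan.db_max_connections is not None and plan.db_max_connections < peak:
        findings.append("raise the database connection limit")
    return findings


# The article's worked example: 1,000,000 requests/hr, 0.5 s, 1GB, two /24 subnets.
print(check(Plan(1_000_000 / 3600, 0.5, 1, actual_eni_capacity=502)))  # []
```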
